
What is Apache Cassandra?

Apache Cassandra is an open source NoSQL column store database. Begun as an internal project at Facebook to meet the company’s needs for massive scaling, the code was made open source in 2008. The current development project is managed by the Apache Software Foundation (ASF), and is formally known as Apache Cassandra™.

What is Apache Cassandra Database?

An Apache Cassandra database consists of open source software running on one or more physical or virtual computer servers known as a Cassandra cluster. It also refers to data stored within the database in the formats and accessed over the Internet via query languages and methodologies specified by the Apache Cassandra project. There is an active Apache Cassandra community where users discuss usage and latest developments.

History of the Cassandra Database

Cassandra was born out of the need for a massive, globally-distributed, always-on, highly available database that could scale to the size of modern web applications and social media. Avinash Lakshman, a co-inventor of Amazon’s Dynamo database, collaborated with Prashant Malik to develop the initial internal database, which was first announced to the world in the 2009 Cassandra whitepaper. While it drew from many of Dynamo’s design principles, Cassandra also adopted features similar to those described in Google’s 2006 Bigtable whitepaper.

Cassandra was designed to handle the “Inbox Search” problem for Facebook. This equated to a system capable of “very high write throughput, billions of writes per day, and also scale with the number of users.” This last number had grown from 1 million Facebook users in 2004 to 100 million users by the time the system went live in June 2008, to over 250 million by the time the Cassandra whitepaper was published in 2009.

Eventually, Facebook replaced Cassandra with HBase, another NoSQL database, for their Inbox Search project, but they continue to use Cassandra in their Instagram division, which supports over 1 billion monthly active users.

While the Bigtable and Dynamo papers were made public, the databases themselves remained behind closed doors at Google and Amazon. Facebook took a different approach. Not only did they publish their whitepaper, they also made its source code publicly available under the auspices of the Apache Software Foundation (apache.org). It was accepted as an incubator project in January of 2009, and graduated the following year in February 2010.

During that period of early adoption, developers such as Rackspace’s Jonathan Ellis and others began to contribute to the project, making it a community-led open source software effort (Ellis later became the Apache Cassandra Chair and a co-founder of DataStax).

By the time the Apache Cassandra project had reached the 1.0 release milestone, it had already seen rapid adoption by companies beyond Facebook such as Cisco, Digg, Rackspace, Reddit, Twitter, and others. By 2012, there were over 1,000 production deployments of the Cassandra database, including by such companies as eBay, Disney, and Netflix.

Since then, the Apache Cassandra project has been upgraded with maintenance releases on a regular basis. Various features have been improved or added over the years. One controversial decision made during its evolution was the adoption of a “Tick-Tock” model for releases starting in 2015. During this period, coinciding with Cassandra 3.0, even-numbered maintenance releases were designated for new features and bug fixes, and odd-numbered releases were to ensure code stability. However, this release model was broadly rejected, and the scheme abandoned in 2017, coinciding with Cassandra 3.10.

In 2019, the project saw increased focus on cloud-native capabilities and improved containerization support. The community also began work on what would become Cassandra 4.0, which represented one of the most extensively tested releases in the project’s history. The release of Cassandra 4.0 in July 2021 marked a major milestone, introducing zero-copy streaming, audit logging capabilities, and Java 11 support. This version was notable for its emphasis on stability and reliability, with over 1,000 bug fixes and the most extensive testing of any release to date.

December 2022 saw the release of Cassandra 4.1, which introduced guardrails, pluggable memtable implementations, and an improved Paxos implementation for lightweight transactions.

In September 2024, Cassandra 5.0 was released, bringing Java 17 support, storage-attached indexing (SAI), and native vector search capabilities, positioning Cassandra for machine learning and AI workloads. The project continues to see widespread adoption, with companies like Apple and Netflix using it to handle massive-scale data workloads and also contributing to the project. Cassandra remains a popular NoSQL database, particularly for applications requiring high availability and global scale.

As of 2025, Cassandra has risen to one of the top fifteen most broadly accepted database solutions in the world, as per the DB-Engines.com ranking.

Apache Cassandra Release History

Apache Cassandra Versions:

1.0 – October 2011 – Performance improvements, compression, hinted handoffs

2.0 – September 2013 – Lightweight transactions based on the Paxos consensus protocol

3.0 – November 2015 – Added Materialized Views (later made experimental); adopted (then later abandoned) the “tick-tock” model for release.

4.0 – July 2021 – Zero-copy streaming, Java 11 support, audit logging

4.1 – December 2022 – Guardrails, pluggable memtables, improved Paxos for lightweight transactions

5.0 – September 2024 – Java 17 support, storage-attached indexing (SAI), vector search capabilities

Cassandra Database Overview

Cassandra Database Design Principles

Cassandra was designed to support high throughput and be horizontally scalable: “Cassandra aims to run on top of an infrastructure of hundreds of nodes… designed to run on cheap commodity hardware and handle high write throughput while not sacrificing read efficiency.” Furthermore, the Cassandra whitepaper identified the need for global data distribution: “Since users are served from data centers that are geographically distributed, being able to replicate data across data centers was key to keep search latencies down.”

Part of its design assumptions included handling failure. Failure was not just a stochastic possibility, it was a constant issue stemming from continuous growth: “Dealing with failures in an infrastructure comprised of thousands of components is our standard mode of operation… As such, the software systems need to be constructed in a manner that treats failures as a norm rather than an exception.”

Another key Cassandra design principle was to be able to auto-partition the data to scale incrementally. The system would be able to add new nodes and rebalance data across the entire cluster. (As opposed to the other then-common practice of manually partitioning, or sharding, data.)

In order to make the system highly available, and to ensure data remained durable, Cassandra implemented automated peer-to-peer replication. With multiple copies of the same data stored across nodes (data duplication), the data would survive the loss of a few nodes. Also, being peer-to-peer meant that no single node could be knocked offline to make the system unavailable. Any node could act as the coordinator for a given request, so there was no single point of failure.

Clusters could be defined as “rack aware” or “datacenter aware” so that data replicas could be distributed in a way that it could even survive physical outages of underlying infrastructure.

Additional Cassandra scalability features that were built in from the start included how to manage membership of nodes in the cluster, how to bootstrap nodes, and how to autoscale clusters up or down.

Cassandra Data Storage Format

A key Cassandra feature also involved how it stored data. Rather than constantly altering large monolithic, mutable (alterable) data files, the system relied upon writing files to disk in an immutable (unalterable) state. If data changed for a particular entry in the database, the change would be written to a new immutable file instead. Automatic system processes, triggered periodically or by the size or modification rate of the files, would gather a number of these immutable files together (each of which may have redundant or obsolete data) and write out a new single composite table file of the most current data, a process known as compaction. The format of these immutable data files is known as a Sorted Strings Table, or SSTable.

Since Cassandra spreads out data across multiple nodes, and multiple SSTable files per node, the system would need to understand where a particular record could be found. To do so, it used a theoretical ring architecture to distribute ranges of data across nodes. And, within a node, it used Bloom filters to determine which SSTable in particular held the specific data being queried.

Learn Cassandra data modeling strategies from Discord’s Bo Ingram in this free on-demand masterclass

Cassandra Query Language (CQL)

Data access in Cassandra also required an API. The original API consisted of only three methods: insert, get and delete. Over time, these basic queries were expanded upon. The resultant API was, in time, called Cassandra Query Language (CQL). CQL resembles in many ways the ANSI Structured Query Language (SQL) used for Relational Database Management Systems (RDBMS), but CQL lacks several of SQL’s features, such as the ability to do JOINs across multiple tables. Some commands are equally valid in CQL and SQL, while other capabilities differ between the two query languages. Thus, even though CQL and SQL bear a great deal of similarity, Cassandra is formally classified as a NoSQL database.

There was also an older query interface for Cassandra based on Apache Thrift, which was deprecated and then removed with the release of Cassandra 4.0.

NoSQL Data Models

Cassandra, by itself, natively focuses upon two different NoSQL data models:

- Wide Column Store – Cassandra is primarily identified as a “wide column store.” This sort of database is particularly adept at handling aggregate functions quickly and is more efficient when dealing with sparse data (where the database may have only a few values set for many possible rows and columns).

- Key Value – Cassandra also readily serves as a key-value store.

Additional NoSQL Data Models

Cassandra can support additional data models with extensions:

- Graph – Cassandra can use various extensions or additional packages, such as through the Linux Foundation’s JanusGraph, to serve as a graph database, which keeps track of interrelationships between entities through edges and vertices.

- Time series – Cassandra can work as a passable time series database (TSDB) by itself, but it can also be made more robust for this use case with open source packages such as KairosDB.

Masterclass: Data Modeling for NoSQL Databases

Looking for extensive training on data modeling for NoSQL databases? Our experts offer a 3-hour masterclass that assists practitioners wanting to migrate from SQL to NoSQL or advance their understanding of NoSQL data modeling. This free, self-paced class covers techniques and best practices on NoSQL data modeling that will help you steer clear of mistakes that could inconvenience any engineering team.

Cassandra: CAP Theorem

The CAP Theorem (as put forth in a presentation by Eric Brewer in 2000) stated that distributed shared-data systems had three properties but systems could only choose to adhere to two of those properties:

- Consistency

- Availability

- Partition-tolerance

Distributed systems designed for fault tolerance are not much use if they cannot operate in a partitioned state (a state where one or more nodes are unreachable). Thus, partition-tolerance is always a requirement, so the two basic modes that most systems use are either Availability-Partition-tolerant (“AP”) or Consistency-Partition-tolerant (“CP”).

An “AP”-oriented database remains available even if it was partitioned in some way, such as if one or more nodes went down, or two or more parts of the cluster were separated (a so-called “split-brain” situation). The system would continue to accept new data (writes). However, its data may become inconsistent across the cluster during the partitioned state. Such inconsistencies would need to be fixed later through anti-entropy methods (such as repairs, hinted handoffs, and so on).

A “CP”-oriented database would instead err on the side of consistency in the case of a partition, even if it meant that the database became unavailable in order to maintain its consistency. For example, a database for a bank might disallow transactions to prevent it from becoming inconsistent and allowing withdrawals of more money than were actually available in an account.

Cassandra was originally designed to operate as an “AP”-mode database. However, it is not as simple as that. Cassandra supports “tunable” consistency, meaning that each read or write can specify its own consistency level, such as requiring a quorum (where a majority, or n/2+1 rounded down, of the n replicas must respond) or requiring all replicas to respond.
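The quorum arithmetic can be made concrete with a small sketch. The function names `quorum` and `overlaps` are hypothetical helpers written for this illustration, not part of any Cassandra driver. The second function encodes the commonly used rule that a read is guaranteed to see the latest acknowledged write when the number of replicas read plus the number written exceeds the replication factor.

```python
def quorum(replication_factor: int) -> int:
    """Smallest majority of replicas: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def overlaps(read_replicas: int, write_replicas: int, replication_factor: int) -> bool:
    """True when every read set must intersect every write set (R + W > RF)."""
    return read_replicas + write_replicas > replication_factor


if __name__ == "__main__":
    for rf in (1, 2, 3, 5):
        print(f"RF={rf}: quorum={quorum(rf)}")
    # QUORUM reads plus QUORUM writes at RF=3 always share at least one replica.
    print(overlaps(quorum(3), quorum(3), 3))
    # Reading one replica and writing one replica at RF=3 may miss the latest write.
    print(overlaps(1, 1, 3))
```

With a replication factor of 3, a quorum is 2 replicas, so quorum reads combined with quorum writes tolerate one unavailable replica while still overlapping.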

Cassandra also supports lightweight transactions (LWT), which are built on the Paxos consensus algorithm. With this feature, Cassandra added serial consistency, both within a local datacenter and across datacenters. This allows linearizability of writes.

With lightweight transactions and a consensus protocol like Paxos, Cassandra can operate somewhat similar to a “CP”-mode database. However, even with these “CP”-oriented features for consistency, it remains classified as an “AP”-mode database.

Cassandra, ACID and BASE

Cassandra is not a fully ACID-style database, since it does not support strict consistency in the ACID-sense such as two-phase commits, rollbacks or locking mechanisms. While it does support other ACID-like features, such as strong consistency (using CL=ALL), compare-and-set updates with Lightweight Transactions, atomicity and isolation on the row-level, and has a durable writes option, it is inaccurate to describe Cassandra as an ACID-compliant database.

Instead, furthering the analogy of comparative pH levels, Cassandra has been described as a BASE database, since it offers Basic Availability, Soft state, and Eventual consistency.

Cassandra Cluster Scalability and High Availability

Given that Apache Cassandra was architected with scalability foremost in mind, Cassandra can scale to a theoretically unlimited number of nodes in a cluster, and clusters can be geographically dispersed, with data exchanged between clusters using multi-datacenter replication.

In Cassandra a node is either a whole physical server, or an allocated portion of a physical server in a virtualized or containerized environment. Each node will have requisite processing power (CPUs), memory (RAM), and storage (usually, in current servers, in the form of solid-state drives, known as SSDs).

These nodes are organized into clusters. Clusters can be in physical proximity (such as in the same datacenter), or can be dispersed over great geographical distances. To organize clusters into datacenters, and then also across different racks (to ensure high availability), Cassandra uses a snitch, a component that tells each node which datacenter and rack the other nodes belong to.

Once deployed Cassandra uses a mechanism called multi-datacenter replication to ensure data is transferred and eventually synchronized between clusters. Note that two clusters could be installed side-by-side in the same datacenter yet employ this mechanism to transfer data between the clusters.

Within a Cassandra cluster, there is no central primary (or master) node. All nodes are peers. When the cluster is first started, nodes discover each other through mechanisms such as the Gossip protocol. Once the topology is established, however, it is not static. The same Gossip mechanism helps to determine when additional nodes are added, or when nodes are removed (either through purposeful decommissioning or through temporary unavailability or catastrophic outages).
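To give a feel for how peers can discover each other without a central coordinator, here is a toy gossip simulation. It is a sketch under stated assumptions, not Cassandra's actual protocol: the `Node` class, the `gossip_until_converged` function, and the single seed node are all hypothetical, and the real message format and failure detection are left out. Each node starts out knowing only itself and the seed, and in every round swaps its view of the cluster with one randomly chosen peer.

```python
import random


class Node:
    def __init__(self, name: str):
        self.name = name
        self.heartbeat = 0
        # What this node believes about the cluster: peer name -> latest heartbeat seen.
        self.view = {name: 0}

    def tick(self) -> None:
        """Advance this node's own heartbeat counter."""
        self.heartbeat += 1
        self.view[self.name] = self.heartbeat

    def exchange(self, other: "Node") -> None:
        """Merge both views, keeping the highest heartbeat seen for each peer."""
        merged = dict(self.view)
        for peer, beat in other.view.items():
            merged[peer] = max(merged.get(peer, -1), beat)
        self.view = dict(merged)
        other.view = dict(merged)


def gossip_until_converged(names, seed_name, rng, max_rounds=100):
    """Run gossip rounds until every node knows every other node."""
    nodes = {n: Node(n) for n in names}
    for node in nodes.values():
        node.view.setdefault(seed_name, 0)  # everyone is configured with the seed
    for round_no in range(1, max_rounds + 1):
        for node in nodes.values():
            node.tick()
            peers = [p for p in node.view if p != node.name]
            if peers:
                node.exchange(nodes[rng.choice(peers)])
        if all(set(n.view) == set(names) for n in nodes.values()):
            return round_no, nodes
    return None, nodes


if __name__ == "__main__":
    rounds, nodes = gossip_until_converged(
        ["n1", "n2", "n3", "n4"], "n1", random.Random(7)
    )
    print("converged after rounds:", rounds)
```

Because every exchange merges two views, knowledge of a new node spreads through the cluster in a handful of rounds even though no node ever contacts all of its peers.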

During such topology changes, Cassandra uses a mechanism to redistribute data across the cluster. Let’s look more into how Cassandra distributes data across the cluster.

Apache Cassandra Data Model: Keyspaces, Columns, Rows, Partitions, Tokens

Within a Cassandra cluster, you can have one or more keyspaces. The keyspace is the largest “container” for data within Cassandra. Creating a keyspace in Cassandra CQL is roughly equivalent to CREATE DATABASE in SQL. You can have multiple keyspaces inside the same Cassandra cluster, but creating too many separate keyspaces can make the cluster less performant. Also be careful about naming your keyspaces, because the names cannot be altered thereafter (you would need to migrate all your data into a differently-named keyspace).

Data is then defined based on the different APIs used to access Cassandra. Under the older Thrift API, data was defined as a column family. However, given that Thrift was removed in Cassandra 4.0, it is more important to consider how data is defined as tables in CQL.

Tables in CQL are somewhat similar to those in ANSI SQL, with columns and rows. They can be modified (adding or removing columns, for instance), but like keyspaces, tables cannot be renamed. You can have multiple tables per keyspace.

Unlike SQL, though, not every row needs a value in every column. For a user, you may or may not have their first and last name, their mobile phone number, their email address, their date of birth, or other specific elements of data. You might just have their first name, “Bob,” and their phone number. The Cassandra data model is therefore more efficient at handling sparse data.

While some people might say this means Cassandra is schemaless, or only supports unstructured data, these assertions are technically imprecise and incorrect. Apache Cassandra does have a schema and structure within each table in a keyspace.

Within a table, you organize data into various partitions. Partitions should be defined based on the query patterns expected against the data (writes, reads and updates), to balance transactions as evenly as possible. Otherwise, frequently-requested data would make for “hot partitions,” since they might be queried (written to or read from) so often. Frequently-written-to partitions can also turn into “large partitions,” potentially growing to a gigabyte or more of data, which can make the database less performant.

Finally, once a partitioning scheme is determined for the database, the Murmur 3 hashing function is used by default to hash the partition key portion of each primary key into a token. These tokens are distributed to the different nodes in the cluster using Cassandra’s data distribution and replication mechanisms across its ring architecture.

Cassandra Ring Architecture for Data Distribution

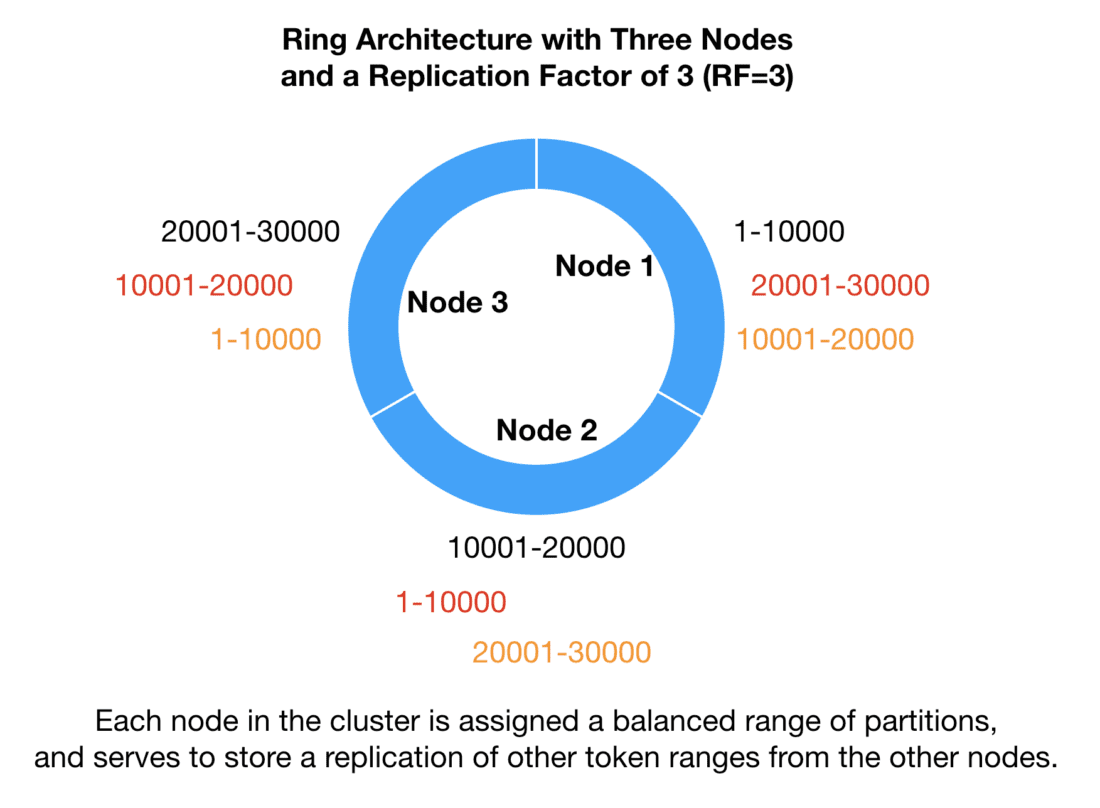

For the Cassandra database model to be a highly-available distributed database, it needs mechanisms to distribute data, queries and transactions across its constituent nodes. As noted, it uses the Murmur 3 partitioning function to distribute data as evenly as possible across the nodes. Say you had three nodes and 30,000 partitions of data. Each node would be assigned 10,000 partitions.
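The three-node example above can be sketched in a few lines. This is an illustration under stated assumptions rather than Cassandra's implementation: Python's standard library has no Murmur 3 function, so MD5 stands in as the hash, and `RING_SIZE`, `token_for`, `build_ring`, and `owner` are hypothetical names chosen for this sketch. Each node gets one evenly spaced token, and a partition belongs to the first node whose token is at or after the partition's token, wrapping around the ring.

```python
import bisect
import hashlib
from collections import Counter

RING_SIZE = 2**64  # assumed token space for this sketch


def token_for(partition_key: str) -> int:
    """Hash a partition key to a token. MD5 is a stand-in for Murmur 3 here."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def build_ring(nodes):
    """Give each node one evenly spaced token; returns a sorted list of (token, node)."""
    step = RING_SIZE // len(nodes)
    return [(i * step, node) for i, node in enumerate(nodes)]


def owner(ring, token: int) -> str:
    """The owner is the first node whose token is >= the key's token, wrapping around."""
    tokens = [t for t, _ in ring]
    index = bisect.bisect_left(tokens, token) % len(ring)
    return ring[index][1]


if __name__ == "__main__":
    ring = build_ring(["node-a", "node-b", "node-c"])
    counts = Counter(owner(ring, token_for(f"user:{i}")) for i in range(30_000))
    print(counts)  # each node ends up with roughly a third of the partitions
```

Because the hash spreads keys uniformly over the token space, each node's share comes out close to 10,000 partitions, though not exactly equal.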

However, to be fault tolerant, there are multiple nodes where data can be found. The number of times a piece of data will be copied within a Cassandra cluster is based on the Replication Factor (RF) assigned to the keyspace. If you set RF to 2, there would be two copies of every piece of data in the database. Many Cassandra clusters are set up with an RF of 3, where each piece of data is written to three different nodes, so that a quorum (a majority of the replicas, here two of three) can agree on a query result, giving more reliable data consistency.

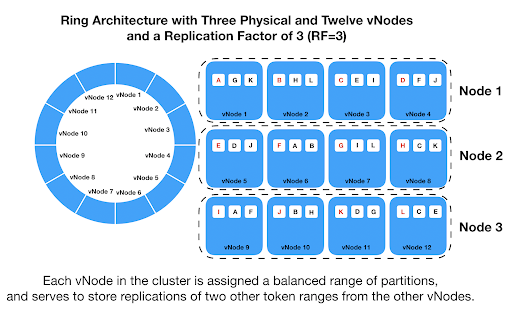

For more granular and finely balanced data distribution, a Cassandra node is further abstracted into Virtual Nodes (vNodes). This mechanism is designed to ensure that data is more evenly distributed across and within nodes. For example, our three node cluster could be split into 12 vNodes. Each physical node would have a number of vNodes, each responsible for a different range of partitions, as well as keeping replicas of other partitions from two other vNodes.

The Cassandra partitioning scheme is also topology aware, so that no single physical node contains all replica copies of a particular piece of data. The system will try to ensure that the partition range replicas in vNodes are all distributed to be stored on different machines. This high availability feature is to maximize the likelihood that even if there are a few nodes down the data remains available in the database.
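A rough sketch of vNodes and topology-aware replica placement follows. It is a simplification made for illustration: the function names are hypothetical, MD5 again stands in for Murmur 3, and the placement rule (walk the ring clockwise and skip vNodes on machines already chosen) is an assumption that ignores racks and datacenters.

```python
import bisect
import hashlib


def _hash64(text: str) -> int:
    """64-bit hash helper; MD5 is a stand-in for Cassandra's Murmur 3 partitioner."""
    return int.from_bytes(hashlib.md5(text.encode("utf-8")).digest()[:8], "big")


def build_vnode_ring(nodes, vnodes_per_node: int):
    """Assign several pseudo-random tokens (vNodes) to every physical node."""
    ring = [
        (_hash64(f"{node}#vnode{i}"), node)
        for node in nodes
        for i in range(vnodes_per_node)
    ]
    return sorted(ring)


def replicas_for(ring, partition_key: str, replication_factor: int):
    """Walk the ring clockwise from the key's token, collecting distinct physical nodes."""
    tokens = [t for t, _ in ring]
    start = bisect.bisect_left(tokens, _hash64(partition_key))
    chosen = []
    for offset in range(len(ring)):
        node = ring[(start + offset) % len(ring)][1]
        if node not in chosen:  # never place two replicas on the same machine
            chosen.append(node)
        if len(chosen) == replication_factor:
            break
    return chosen


if __name__ == "__main__":
    ring = build_vnode_ring(["node-a", "node-b", "node-c"], vnodes_per_node=4)
    print(len(ring), "vNodes on the ring")
    print(replicas_for(ring, "user:42", replication_factor=2))
```

Splitting each machine into several vNodes interleaves the machines around the ring, so the token ranges a node owns, and the replicas it holds for its neighbors, are spread over the whole cluster rather than over one contiguous slice.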

Note that the vNode architecture has proven restrictive in practice. Its static data distribution negatively impacts elasticity. Read how a “tablets”-based architecture enables faster scaling.

Apache Cassandra Architecture

Apache Cassandra’s architecture is distributed across numerous commodity servers. It is a NoSQL database engineered to offer high availability and fault tolerance, capable of managing large volumes of data effectively.

Apache Cassandra operates as a peer-to-peer distributed system where all nodes in the cluster are equal and communicate directly, eliminating the need for a centralized coordinator and avoiding a single point of failure. Data is distributed across the cluster in a ring, with each node responsible for a specific range determined by a hashing algorithm. Cassandra replicates data across multiple nodes, which can be configured within a single data center or across multiple sites. It employs a flexible, schema-optional partitioned row store data model, organizing data into rows based on a partition key, which may be a subset or the entirety of the primary key if it is simple.

To support fast read and write operations, Cassandra uses a distributed commit log and memtable for writes and performs distributed reads across replicas. Additionally, the gossip protocol enables internode communication, allowing nodes to discover and share information about the cluster’s state and network topology.


Apache Cassandra Storage and Memory

A write in Cassandra first goes to an in-memory structure called the memtable and is also appended to a persistent commit log.

Once committed, that data will continue to be held in the memtable. Periodically a collection of updates in the memtable will be flushed and written to a Sorted Strings Table (SSTable). The SSTable is usually written to persistent storage, but for fastest performance it can also reside in-memory (in RAM).

Whereas many databases have files that are constantly changing with each new write (“mutable” files), Cassandra SSTable files are immutable — they do not change once written. Apache Cassandra writes these immutable files in a Log Structured Merge tree (LSM tree) format. If a record needs to be updated, it is written to a new file instead. If a record needs to be deleted, the immutable tables are not altered, but instead an artifact called a tombstone is written to a new SSTable, a marker that the original record is to be deleted, and also, if a query comes in for that data, it should not appear in any returned result.

Over time a number of separate SSTable files are merged together through a process known as compaction, where only the latest version of each data record is written to the new SSTable. Any data associated with a tombstone is not written to the new SSTable. Compaction can either be automatic (the system will periodically compact files), or it can be triggered manually by an operator.
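The write path, tombstones, and compaction described above can be modeled with a tiny in-memory store. This is a teaching sketch, not Cassandra's storage engine: `TinyLSM`, its `flush_threshold` parameter, and the use of Python lists and tuples in place of files are all assumptions. It also drops tombstones immediately during compaction, whereas Cassandra keeps them for a configurable grace period first.

```python
TOMBSTONE = object()  # marker meaning "this key was deleted"


class TinyLSM:
    def __init__(self, flush_threshold: int = 2):
        self.flush_threshold = flush_threshold
        self.commit_log = []  # append-only record of every write (stands in for a file)
        self.memtable = {}    # in-memory and mutable
        self.sstables = []    # oldest first; each is an immutable sorted tuple of pairs

    def put(self, key, value):
        self.commit_log.append((key, value))
        self.memtable[key] = value
        if len(self.memtable) >= self.flush_threshold:
            self.flush()

    def delete(self, key):
        # A delete is just a write of a tombstone; older SSTables are never altered.
        self.put(key, TOMBSTONE)

    def flush(self):
        """Write the memtable out as a new immutable, sorted SSTable."""
        if self.memtable:
            self.sstables.append(tuple(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            value = self.memtable[key]
            return None if value is TOMBSTONE else value
        for table in reversed(self.sstables):  # newest SSTable wins
            for k, v in table:
                if k == key:
                    return None if v is TOMBSTONE else v
        return None

    def compact(self):
        """Merge all SSTables, keeping only the latest live value per key."""
        latest = {}
        for table in self.sstables:  # oldest first, so newer values overwrite
            latest.update(table)
        merged = tuple(sorted((k, v) for k, v in latest.items() if v is not TOMBSTONE))
        self.sstables = [merged] if merged else []


if __name__ == "__main__":
    db = TinyLSM(flush_threshold=2)
    db.put("a", 1)
    db.put("b", 2)    # memtable is full: flushed to the first SSTable
    db.put("a", 10)
    db.delete("b")    # flushed to a second SSTable containing a tombstone
    print(len(db.sstables), db.get("a"), db.get("b"))
    db.compact()
    print(db.sstables)
```

After compaction, the two SSTables collapse into one that holds only the newest value for "a"; the deleted key "b" and its tombstone are gone.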

Bloom Filter in Cassandra

The read path for Cassandra goes through the memtable first, then to a row cache (if it was enabled), a Bloom filter (which tells the system if the data will be found in storage), a partition key cache, and only then does it check for the data on disk.

Since the memtable and caches are stored in RAM, these reads happen very quickly. SSTable lookups can vary in time, depending on whether the SSTable is stored in-memory, on solid state drives (SSDs), or on traditional spinning hard disk drives (HDDs). In-memory storage is fastest, but also the most-expensive option. Hard disk drives are the slowest, but also the least-expensive option. Solid state drives provide a middle-ground in terms of performance and affordability.

The role of the Bloom Filter in the read path is to avoid having to check every SSTable on the node to figure out in which file particular data exists. A Bloom Filter is a probabilistic mechanism to say that either the data does not exist in that file, or that it probably does exist in a file; false positives are possible, but false negatives are not. The Bloom Filter resides in memory, like the memtable and caches, but it exists offheap.
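A minimal Bloom filter makes the "probably present or definitely absent" behavior easy to see. This sketch is an assumption-laden illustration, not Cassandra's implementation: the `BloomFilter` class, its default sizes, and the use of salted SHA-256 to derive bit positions are choices made here for clarity.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits: int = 1024, num_hashes: int = 3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, key: str):
        # Derive several bit positions by salting one hash function with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        """False means definitely absent; True means probably present."""
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key)
        )


if __name__ == "__main__":
    sstable_filter = BloomFilter()
    for i in range(100):
        sstable_filter.add(f"user:{i}")
    # Keys that were added are always reported as possibly present.
    print(all(sstable_filter.might_contain(f"user:{i}") for i in range(100)))
    # A key that was never added is usually, but not always, reported as absent.
    print(sstable_filter.might_contain("user:unknown"))
```

In a read path, one such filter per SSTable lets the node skip any file whose filter answers False, so disk is touched only for the files that might hold the requested partition.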

Cassandra Garbage Collection

Apache Cassandra is built on Java and runs in the Java Virtual Machine (JVM). Cassandra therefore relies on JVM Garbage Collection, or JVM GC, to periodically identify and reclaim unused memory so there is always enough space to allocate new objects. Java GC performance, however, depends on how well it is tuned for your specific Cassandra cluster architecture and workload.

Cassandra GC tuning is essential to maintain low latency and high throughput. Engineers want the JVM garbage collector to run often enough that there is always space in RAM to allocate new objects, but not so often that garbage collection pauses affect node performance. Specific tuning varies greatly depending on the types of queries and transactional loads running in a Cassandra database. According to The Last Pickle, correctly tuning the JVM can yield a 5-10x improvement in latency and throughput, reducing costs dramatically. Conversely, a modern NoSQL database architecture built on C++ like ScyllaDB is designed to be self-tuning while avoiding the wasteful garbage collection in Cassandra.

Apache Cassandra Ecosystem

Because Cassandra is currently one of the most popular databases in the world, there are many integrations made for it to interoperate with other open source and commercial big data projects.

Some of these include the above-mentioned integrations to adapt Cassandra to support new data models and use cases, such as time series data (KairosDB) or graph database models (JanusGraph). Others are for integration with streaming data solutions like Apache Kafka, or Lightbend’s Akka toolkit.

There are also Cassandra integrations with big data analytics systems. Some good examples of this include pairing Cassandra with Apache Spark, Hadoop (Pig/Hive), search engines (such as Solr or Elasticsearch), and fast in-memory deployment solutions (Apache Ignite).

As well, there are various tools and technologies to virtualize, deploy and manage Cassandra, with implementations for Docker, Kubernetes, and Mesosphere DC/OS among others.

Apache Cassandra, being open source, has also been implemented within many products and services as an underlying database, such as VMware’s vCloud Director.

For application developers, the broad popularity of Cassandra has meant that there are clients written to connect to it from many different programming languages.

Apache Cassandra Capabilities: Strengths and Weaknesses

Apache Cassandra is typically used when you need to rapidly prototype and deploy a key-value store database that scales to multiple gigabytes or terabytes of information — what are often referred to as “big data” applications. It is also used for “always on” use cases with high volume transactional requests (reads and writes).

It is most appropriate for wide column, key-value and time series data models. It can also be used as an underlying storage layer for a graph database, but only through additional packages such as JanusGraph.

On the other hand, Apache Cassandra is inappropriate for small data sets (smaller than, say, dozens of gigabytes), when database scalability or availability are not vital issues, or when there are relatively low amounts of transactions.

It is also important to remember Apache Cassandra is an open source NoSQL database that uses its own CQL query language, so it should be used when data models do not require normalized data with JOINs across tables which are more appropriate for SQL RDBMS systems.

[See how Cassandra compares to ScyllaDB, a popular Cassandra alternative]

Apache Cassandra Variants and Alternatives

Cassandra has spawned a number of databases that extend Cassandra capabilities, or work using its APIs.

- ScyllaDB — a Cassandra alternative rewritten completely in C++ providing additional features. Has both an open source and commercial variant. Also has a commercial cloud-hosted version.

- Datastax Enterprise — a commercialized variant of Cassandra that provides additional features. Also supports a commercial cloud-hosted version.

- Microsoft Cosmos DB — a commercialized, cloud-hosted database that supports a Cassandra CQL-compatible API.

- Amazon Keyspaces (for Apache Cassandra) — a hybrid of Apache Cassandra for its CQL interface and DynamoDB for scalability. Formerly known as Amazon Managed Cassandra Service. (Read more.)

- Yugabyte — an open-source database that supports a Cassandra CQL-compatible API. Also has a commercial cloud-hosted version.

Apache Cassandra Migration

There are multiple steps in a Cassandra migration strategy for moving data to an eventually consistent database such as ScyllaDB, the fastest NoSQL Database, and verifying its consistency for a low latency, high volume application. If you are migrating from Cassandra to ScyllaDB, the steps look like this (at a high level):

1. Duplicate the Apache Cassandra schema in ScyllaDB, although some variation is permissible

2. Configure the application(s) to read only from Apache Cassandra while performing dual writes

3. Take a snapshot of all to-be-migrated data from the existing Apache Cassandra clusters

4. Use the ScyllaDB sstableloader tool to load the SSTable files into ScyllaDB, then validate the data

5. Conduct verification, with dual writes and reads, logging mismatches, until the system reaches the minimal data mismatch threshold

6. Complete the migration: the Cassandra database reaches the end of its life, and only ScyllaDB serves reads and writes

Note that steps 2 and 5 are only required for live migration with no downtime and ongoing traffic.

There are several ways to handle failed Apache Cassandra schema migrations.

If sstableloader fails, it is necessary to repeat the loading job, because each loading job is per keyspace/table_name. If the reload repeats data that was partly loaded before the failure, compaction eliminates any resulting duplicates.

If a node in the Apache Cassandra database fails, there are two possibilities. If the failed node was one you were using to load SSTables, you will also experience failure of the sstableloader. This will mean repeating the loading job, as explained above.

But if you were using RF>1, the data exists on another node or nodes. Simply redirect away from the failed node’s IP address and continue sstable loading from the other nodes, after which all the data should be on ScyllaDB.

If a ScyllaDB node fails and it was the target of sstables, again, the sstableloader will also fail. In that case, restart the loading job with a different target ScyllaDB node.

To roll back a Cassandra migration and start over again, follow these steps:

- Stop dual writes to ScyllaDB

- Use this command: [sudo systemctl stop scylla-server] to stop ScyllaDB service

- For all data already loaded to ScyllaDB, use [cqlsh] to perform [truncate]

- Restart dual writes to ScyllaDB

- Capture a new snapshot of all Cassandra nodes

- From the NEW snapshot folder, start loading SSTables to ScyllaDB again

To learn more about how the ScyllaDB architecture differs from Cassandra’s and why this matters, read this white paper: Building the Monstrously Fast and Scalable NoSQL Database: Eight Design Principles behind ScyllaDB