When choosing a database solution, it’s important to make sure it can scale to your business needs and provide fault tolerance and high availability. ScyllaDB incorporates many features of Apache Cassandra’s scale-out design, including distributed workload and storage along with eventual consistency. In this post, we will explore how fault tolerance and high availability work in ScyllaDB’s architecture. This post is meant for new users of ScyllaDB; advanced users are probably already familiar with these basic concepts. (By the way, advanced users are also encouraged to nominate themselves for our ScyllaDB User Awards.)

What happens if a catastrophe occurs in or between your data centers? What if a node goes down or becomes unreachable for any reason? ScyllaDB’s fault tolerance features significantly mitigate the potential for catastrophe. To get the best fault tolerance out of ScyllaDB, you’ll need to understand how to select the right fault tolerance strategy, which includes setting a Replication Factor (the number of nodes that contain a copy of the data) for your keyspaces and choosing the right Consistency Level (the number of nodes that must respond to read or write operations).

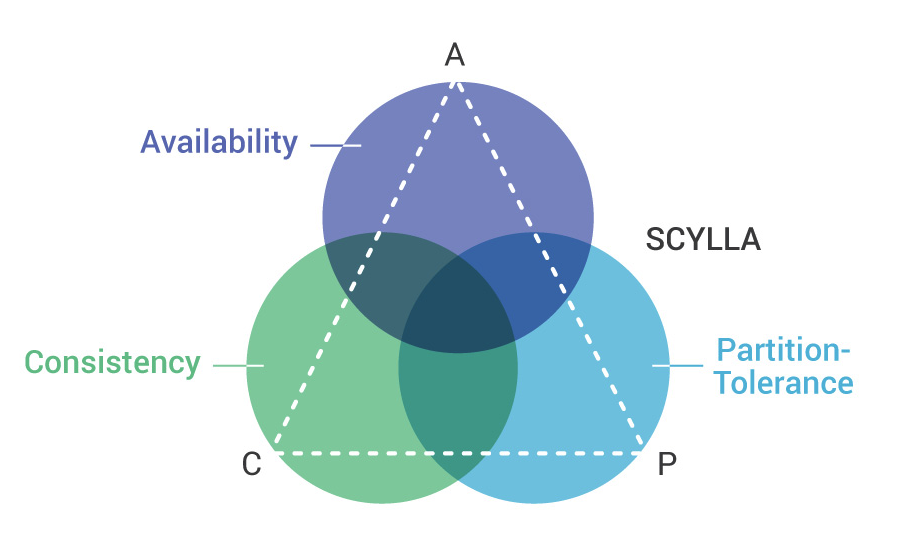

Like many distributed database systems, ScyllaDB is subject to the CAP Theorem: a distributed system can guarantee at most two of consistency, availability, and partition tolerance at the same time. Because network partitions cannot be ruled out in practice, the real choice during a partition is between consistency and availability.

ScyllaDB chooses availability and partition tolerance over consistency, on the following reasoning:

- It’s impossible to be both consistent and highly available during a network partition

- If we sacrifice consistency, we can be highly available

Specifying a replication factor (RF) when setting up your ScyllaDB keyspaces ensures that your keyspace is replicated to the number of nodes you specify. Since this affects performance and latency, your consistency level (CL) – tunable for each read and write query – lets you incrementally adjust how many read or write acknowledgments your operation requires for completion.

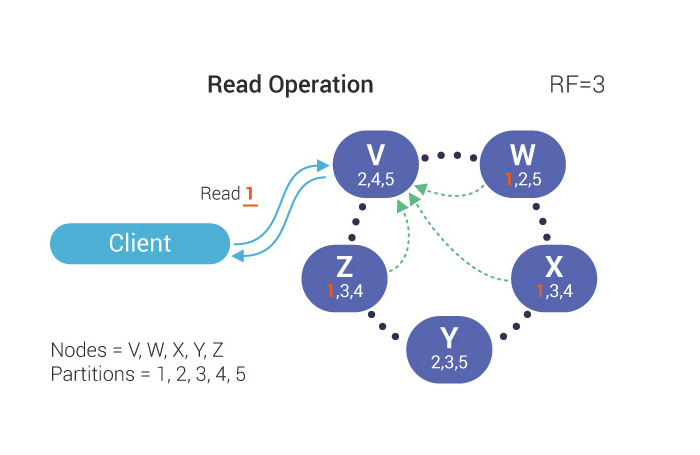

The replication factor and consistency level play important roles in making ScyllaDB highly available. The Replication Factor (RF) is the number of nodes to which data (rows and partitions) is replicated. An RF of 1 means there is only one copy of a row in the cluster, and there is no way to recover the data if that node is compromised or goes down. RF=2 means that there are two copies of a row in the cluster. Most systems use an RF of at least 3.

Data is always replicated automatically. Read or write operations can be served by any of the replica nodes that store the data.

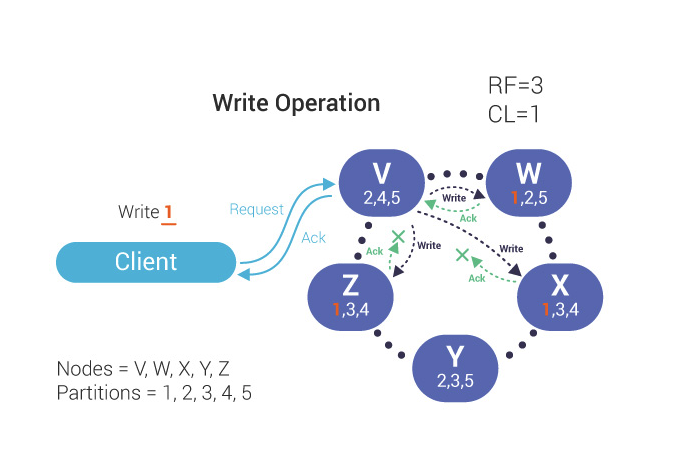

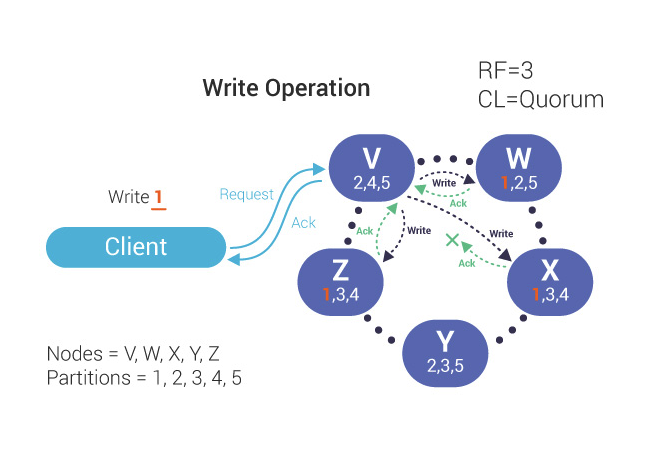

Our client sends a request to write partition 1 to node V; partition 1’s data is replicated to nodes W, X, and Z, so we have a Replication Factor (RF) of 3. In this drawing, V is a coordinator node but not a replica node. However, replica nodes can also be coordinator nodes, and often are.

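During a read operation, the client sends a request to the coordinator. Because RF=3, three replicas hold the data and are able to respond to the read request; how many responses the coordinator waits for depends on the Consistency Level.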

The Consistency Level (CL) determines how many replicas in a cluster must acknowledge a read or write operation before it is considered successful.

Some of the most common Consistency Levels used are:

- ANY – A write is written to at least one node in the cluster. Provides the highest availability with the lowest consistency.

- QUORUM – When a majority of the replicas respond, the request is honored. If RF=3, then 2 replicas must respond. QUORUM can be calculated using the formula floor(n/2) + 1, where n is the Replication Factor and the division is rounded down.

- ONE – If one replica responds, the request is honored.

- LOCAL_ONE – At least one replica in the local data center responds.

- LOCAL_QUORUM – A quorum of replicas in the local data center responds.

- EACH_QUORUM (unsupported for reads) – A quorum of replicas in each data center must be written to.

- ALL – A write must be written to all replicas in the cluster; a read waits for a response from all replicas. Provides the lowest availability with the highest consistency.
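
The replica counts implied by the levels above can be worked out with a few lines of arithmetic. The following is a minimal sketch for a single data center, not ScyllaDB code: the function names `quorum` and `required_replicas` are made up for illustration. ANY and the multi-data-center levels (LOCAL_ONE, LOCAL_QUORUM, EACH_QUORUM) are left out, since they depend on details this simple model does not capture.

```python
def quorum(rf: int) -> int:
    """Majority of rf replicas: floor(rf / 2) + 1."""
    if rf < 1:
        raise ValueError("replication factor must be at least 1")
    return rf // 2 + 1  # integer division rounds down


def required_replicas(cl: str, rf: int) -> int:
    """Replicas that must respond for a single-data-center request.

    Hypothetical helper for illustration only; it covers ONE, QUORUM, and ALL.
    """
    table = {"ONE": 1, "QUORUM": quorum(rf), "ALL": rf}
    return table[cl.upper()]


if __name__ == "__main__":
    for rf in (1, 2, 3, 5):
        counts = {cl: required_replicas(cl, rf) for cl in ("ONE", "QUORUM", "ALL")}
        print(f"RF={rf}: {counts}")
```

Note that with RF=2 a quorum is also 2, so QUORUM behaves like ALL at that replication factor; this is one reason an RF of 3 is a common starting point.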

During a write operation, the coordinator communicates with the replicas (the number of which depends on the Consistency Level and Replication Factor). The write is successful when the specified number of replicas confirm the write.

In the above diagram, the double arrows indicate the write request going from the client into the coordinator and the acknowledgment being returned. Since the Consistency Level is ONE, the coordinator, V, must wait for the write to be sent to and acknowledged by only a single replica, which is, in this case, W.

Since RF=3, our partition 1 is also written to nodes X and Z, but the coordinator does not need to wait for a response from them to confirm a successful write operation. In practice, acknowledgments from nodes X and Z can arrive at the coordinator at a later time, after the coordinator acknowledges the client.

When our Consistency Level is set to QUORUM, the coordinator must wait for a majority of replicas to acknowledge the write before it is considered successful. Since our Replication Factor is 3, we must wait for 2 acknowledgments (the coordinator does not need to wait for the third).
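
The two write scenarios above (Consistency Level ONE and QUORUM with RF=3) can be sketched as a toy coordinator that counts acknowledgments. This is an illustrative model only, not how ScyllaDB is implemented: `coordinate_write`, its `required` parameter, and the idea of passing in the acknowledgment order as a list are all assumptions made for the example. Node names follow the diagram.

```python
from typing import List, Optional


def coordinate_write(ack_order: List[str], required: int) -> Optional[List[str]]:
    """Return the replicas the coordinator waited for, or None on failure.

    ack_order: replicas that acknowledged the write, in arrival order.
    required:  acknowledgments demanded by the Consistency Level.
    """
    if required < 1:
        raise ValueError("required must be at least 1")
    if len(ack_order) < required:
        return None  # too few replicas answered: the write is not confirmed
    # The client is answered as soon as `required` acks are in; later acks
    # may still arrive, but the coordinator does not wait for them.
    return ack_order[:required]


if __name__ == "__main__":
    acks = ["W", "X", "Z"]             # RF=3, all replicas eventually respond
    print(coordinate_write(acks, 1))   # Consistency Level ONE
    print(coordinate_write(acks, 2))   # Consistency Level QUORUM
    print(coordinate_write(["W"], 2))  # QUORUM when only W responds
```

In this model the only difference between ONE and QUORUM is the value of `required`: the write still goes to all three replicas, but the client is answered after one or two acknowledgments respectively.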

During a read operation, the coordinator communicates with just enough replicas to guarantee that the required Consistency Level is met. Data is then returned to the client.

The Consistency Level is tunable per operation in CQL. This is known as tunable consistency. Sometimes response latency matters more than reading the most recent data, so it can be useful to set the Consistency Level for an individual query or operation instead of applying one default to every request. In other words, the Consistency Level setting allows you to choose a point in the consistency vs. latency tradeoff.

The Consistency Level and Replication Factor both impact performance. The lower the Consistency Level and/or Replication Factor, the faster the read or write operation. However, a lower Replication Factor leaves fewer copies of the data, so there is less fault tolerance if a node goes down. The Consistency Level itself impacts availability. A higher Consistency Level requires more replicas to be online, which means less availability and less tolerance for node failures. A lower Consistency Level means more availability and more tolerance for node failures.
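
The availability side of this tradeoff can be made concrete by counting how many replicas may be down before a request at a given Consistency Level can no longer succeed. The sketch below is a back-of-the-envelope calculation for a single data center; `tolerated_failures` is a hypothetical helper, and it inlines the quorum arithmetic (floor(RF/2) + 1).

```python
def tolerated_failures(cl: str, rf: int) -> int:
    """How many of the rf replicas can be down while `cl` is still satisfiable."""
    if rf < 1:
        raise ValueError("replication factor must be at least 1")
    required = {"ONE": 1, "QUORUM": rf // 2 + 1, "ALL": rf}[cl.upper()]
    return rf - required


if __name__ == "__main__":
    for cl in ("ONE", "QUORUM", "ALL"):
        n = tolerated_failures(cl, 3)
        print(f"RF=3, CL={cl}: survives {n} replica failure(s)")
```

With RF=3, ONE tolerates two unavailable replicas, QUORUM tolerates one, and ALL tolerates none.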

In this post, we went over ScyllaDB’s highly available architecture and explained fault tolerance. For more information on these topics, we encourage you to read our architecture and fault tolerance documentation.
