Cassandra Data Migration FAQs

What is the Apache Cassandra Database?

Apache Cassandra is a distributed, open-source database management system (DBMS). Cassandra is a wide-column store NoSQL database designed to manage massive amounts of data across many servers. This category also includes databases such as ScyllaDB, Google Bigtable, and Apache HBase.

Clients communicate with it using the Cassandra Query Language (CQL). Its tables are organized by their keys: partition keys, clustering keys, and the composite keys built from them.

By eliminating single points of failure, the distributed structure enables the system to achieve scalability and high availability for mission-critical data.

In terms of the CAP theorem, Cassandra is described as an “AP” system, meaning it favors availability over consistency. However, Cassandra is also configurable: the consistency level can be tuned to suit the use case.
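To make the tunable consistency point concrete, here is a minimal arithmetic sketch. It assumes the usual quorum rule, where a QUORUM operation must reach a majority of replicas, and the common rule of thumb that reads see the latest write when the read and write replica counts together exceed the replication factor. The function names are hypothetical and are not part of any Cassandra API.

```python
def quorum(replication_factor: int) -> int:
    """Replicas a QUORUM read or write must reach: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def overlaps(read_replicas: int, write_replicas: int, replication_factor: int) -> bool:
    """True when every read set must intersect every write set (R + W > RF)."""
    return read_replicas + write_replicas > replication_factor


rf = 3                            # hypothetical replication factor
q = quorum(rf)                    # majority of 3 replicas
strong = overlaps(q, q, rf)       # QUORUM reads with QUORUM writes
weak = overlaps(1, 1, rf)         # ONE reads with ONE writes

print(f"RF={rf}: quorum={q}, quorum/quorum overlaps={strong}, one/one overlaps={weak}")
```

Lower consistency levels trade that overlap guarantee for lower latency and higher availability, which is the tuning knob the paragraph above refers to.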

When Do Teams Perform Cassandra Data Migrations?

Teams commonly perform Cassandra data migrations when:

- Upgrading to a new version of Cassandra

- Changing Cassandra deployment models

- Adopting a new Cassandra or Cassandra-compatible database, such as ScyllaDB

Here are some examples of real-world Cassandra migrations:

- Discord: Migrated trillions of messages for their messaging platform

- Tripadvisor: Migrated 425K ops with 1-3 ms P99 latencies for real-time personalization

- Rakuten: Migrated their catalog with 700M+ items and 250M items processed per day

- Expedia: Migrated the database for geography-based recommendations

Approaches to Cassandra Data Migration

There are several approaches to Cassandra data migration. The two described here are offline migration and live (zero-downtime) migration with shadow writes.

Offline Cassandra data migration. An offline approach takes the existing system completely offline while the team performs the migration.

The benefit of this approach is that it is the simplest and safest method, with the lowest chance of data loss. The drawback is that the system is offline for the duration of the migration, which causes significant downtime.

The offline Cassandra data migration workflow is:

- Creating the same schema in the new database (some minor variation should be fine).

- Taking a snapshot of all to-be-migrated data from Apache Cassandra.

- Loading the SSTable files into the new database and validating the data.

- Legacy Apache Cassandra End Of Life: Use only the new database for reads and writes.

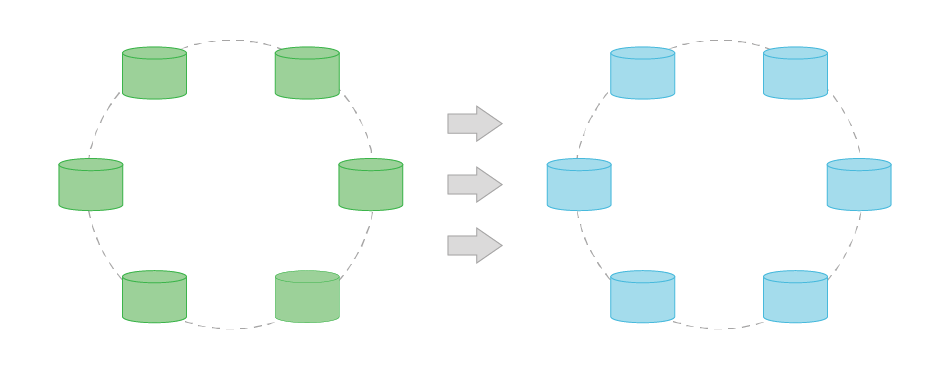
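The snapshot and load steps of the offline workflow could be scripted roughly as follows. This is only a sketch: it assumes the standard `nodetool snapshot` and `sstableloader` command-line tools, which the text above does not name, and the keyspace, snapshot tag, host addresses, and staging path are all hypothetical. The code builds and prints the commands without running them.

```python
import shlex
from typing import List


def snapshot_command(keyspace: str, tag: str) -> List[str]:
    """Build a `nodetool snapshot` invocation for one keyspace."""
    return ["nodetool", "snapshot", "-t", tag, keyspace]


def load_command(target_hosts: List[str], sstable_dir: str) -> List[str]:
    """Build an `sstableloader` invocation that streams one table directory."""
    return ["sstableloader", "-d", ",".join(target_hosts), sstable_dir]


# Hypothetical values, for illustration only.
keyspace = "shop"
tag = "pre_migration"
new_cluster = ["10.0.0.1", "10.0.0.2"]
staged_table_dir = "/staging/shop/orders"   # snapshot files copied into keyspace/table layout

steps = [
    snapshot_command(keyspace, tag),             # run on every source node
    load_command(new_cluster, staged_table_dir)  # run once per staged table
]
for step in steps:
    print(shlex.join(step))
```

Schema creation and data validation are left out here because they depend on the application's tables and on how much variation the new schema introduces.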

Live (zero-downtime) Cassandra data migration with shadow writes. This approach runs the old system and the new system in parallel to achieve zero downtime. It demands ample testing time to ensure the new system is entirely healthy before the old one is shut down.

Compared with the offline workflow, this approach adds two steps, dual writes and a verification period:

- Creating the same schema in the new database (some minor variation should be fine).

- Configuring your application/s to perform dual writes (still reading only from the original Apache Cassandra).

- Taking a snapshot of all to-be-migrated data from Apache Cassandra.

- Loading the SSTable files into the new database and validating the data.

- Verification period: the application performs dual writes and dual reads, with the new database serving reads. Mismatches are logged until they fall to a minimal threshold.

- Legacy Apache Cassandra End Of Life: Use only the new database for reads and writes.
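The dual-write and verification steps above can be sketched in application code. The example below is a simplified illustration, not a production pattern: plain dictionaries stand in for the legacy Cassandra cluster and the new database, and the class name, method names, and the 0.1% cutover threshold are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple


class DualWriteStore:
    """Writes go to both stores; reads are served by the new store and compared."""

    def __init__(self, legacy: Dict[str, str], target: Dict[str, str]) -> None:
        self.legacy = legacy
        self.target = target
        self.reads = 0
        self.mismatches: List[Tuple[str, Optional[str], Optional[str]]] = []

    def write(self, key: str, value: str) -> None:
        """Dual write: apply the same mutation to both databases."""
        self.legacy[key] = value
        self.target[key] = value

    def read(self, key: str) -> Optional[str]:
        """Verification-period read: serve from the new store, log disagreements."""
        new_value = self.target.get(key)
        old_value = self.legacy.get(key)
        self.reads += 1
        if new_value != old_value:
            self.mismatches.append((key, old_value, new_value))
        return new_value

    def mismatch_rate(self) -> float:
        return len(self.mismatches) / self.reads if self.reads else 0.0


legacy = {"user:1": "alice"}            # row written before dual writes began
store = DualWriteStore(legacy, target={})
store.write("user:2", "bob")            # dual write lands in both stores
store.target["user:1"] = "alice"        # stands in for the snapshot and SSTable load
store.read("user:1")
store.read("user:2")
ready_for_cutover = store.mismatch_rate() <= 0.001   # hypothetical threshold
print(store.mismatches, ready_for_cutover)
```

A real implementation would also need to decide how to handle a write that succeeds on one database and fails on the other, which this sketch ignores.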

Data Migration From RDBMS to Cassandra

There are several steps to data migration from RDBMS to Cassandra or Cassandra-compatible databases: adapting the data model and application, planning the deployment, and moving the data.

Adapting the data model is often the greatest challenge. Cassandra data modeling and relational data modeling are different. Although Cassandra Query Language (CQL) is similar to SQL and Cassandra uses familiar concepts including rows, tables, and columns, there are important differences to be aware of.

A relational database management system (RDBMS) stores data in a table with rows that all span a number of columns. If one row needs an additional column, that column must be added to the entire table, with null or default values provided for all the other rows. If you need to query that RDBMS table for a value that isn’t indexed, the table scan to locate those values will be very slow.

Wide-column NoSQL databases still have the concept of rows, but reading or writing a row of data consists of reading or writing the individual columns. A column is only written if there’s a data element for it. Each data element can be referenced by the row key, so querying for a value is optimized like querying an index in an RDBMS, rather than requiring a slow table scan.
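The contrast between the two storage models can be illustrated with plain Python data structures. This is a conceptual sketch only: the column names and values are made up, and real wide-column engines store data on disk very differently from an in-memory dictionary.

```python
from typing import Dict

# Relational style: every row carries every column, so missing values become None.
relational_columns = ("id", "name", "email", "phone")
relational_rows = [
    (1, "alice", "alice@example.com", None),
    (2, "bob", None, None),
]

# Wide-column style: each row key maps only to the columns that were written.
wide_rows: Dict[int, Dict[str, str]] = {
    1: {"name": "alice", "email": "alice@example.com"},
    2: {"name": "bob"},
}

# Adding a column for one row touches only that row.
wide_rows[2]["nickname"] = "bobby"

# Lookup by row key is a direct access, not a scan over all rows.
bob = wide_rows[2]
stored_cells = sum(len(columns) for columns in wide_rows.values())
print(bob, stored_cells)
```

In the relational layout, adding `nickname` would mean widening every tuple, while the wide-column layout stores the new cell for row 2 alone.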

Learn essential strategies for wide column data modeling with Apache Cassandra and ScyllaDB

For more details on the differences between SQL and NoSQL, see this NoSQL Guide.

Does ScyllaDB Offer a Cassandra Data Migration Tool?

Yes. Because so many teams migrate from Cassandra to ScyllaDB, the ScyllaDB community has developed a specialized migrator tool to simplify the Cassandra migration process.

The ScyllaDB Migrator project is a Spark-based application that performs one simple task: it reads data from a table on a live Cassandra instance and writes it to ScyllaDB. It does so by running a full scan on the source table in parallel; the source table is divided into partitions, and each task in the Spark stage copies a partition to the destination table.

Because it uses Spark to copy the data from Cassandra, the migrator can distribute the data transfer across several processes on different machines, which substantially improves performance. The Cassandra migrator also includes several other features, including:

- It is highly resilient to failures, and will retry reads and writes throughout the job;

- It will continuously write savepoint files that describe which token ranges have already been transferred: should the Spark job be stopped, these savepoint files can be used to resume the transfer from the point at which it stopped;

- It can be configured to preserve the WRITETIME and TTL attributes of the fields that are copied;

- It can handle simple column renames as part of the transfer (and can be extended to handle more complex transformations).
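The idea behind parallel token-range scans and savepoints can be sketched in a few lines. This is not the ScyllaDB Migrator's actual code or savepoint file format. It is a simplified illustration that assumes the Murmur3 partitioner's token range of -2^63 to 2^63 - 1, and every function and variable name is hypothetical.

```python
from typing import List, Set, Tuple

MIN_TOKEN = -(2**63)           # Murmur3 partitioner token bounds
MAX_TOKEN = 2**63 - 1

TokenRange = Tuple[int, int]   # inclusive (start, end)


def split_ring(parts: int) -> List[TokenRange]:
    """Split the full token ring into contiguous, non-overlapping ranges."""
    total = MAX_TOKEN - MIN_TOKEN + 1
    step = total // parts
    ranges: List[TokenRange] = []
    start = MIN_TOKEN
    for i in range(parts):
        # The last range absorbs any remainder so the ring is fully covered.
        end = MAX_TOKEN if i == parts - 1 else start + step - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def pending(ranges: List[TokenRange], done: Set[TokenRange]) -> List[TokenRange]:
    """Ranges still to copy, given the ranges recorded in a savepoint."""
    return [r for r in ranges if r not in done]


ranges = split_ring(4)
savepoint: Set[TokenRange] = set()
for token_range in ranges[:2]:     # pretend the job stopped after two ranges
    savepoint.add(token_range)     # a real job would copy the range, then persist this
remaining = pending(ranges, savepoint)
print(f"{len(remaining)} of {len(ranges)} ranges left to copy")
```

Because each range is independent, workers can copy ranges in any order, and a restarted job only needs the set of completed ranges to pick up where it left off.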

To learn more:

- Read the blog Simplifying Cassandra and DynamoDB Migrations with the ScyllaDB Migrator

- Watch the NoSQL Migration Masterclass (free and on demand)

- Go to the ScyllaDB Migrator GitHub project