Where an RDBMS-pro’s intuition led him astray – and what he learned when our database performance expert helped him get up and running with ScyllaDB NoSQL

Recently, I was asked by the ScyllaDB team if I could document some of my learnings moving from relational to NoSQL databases.

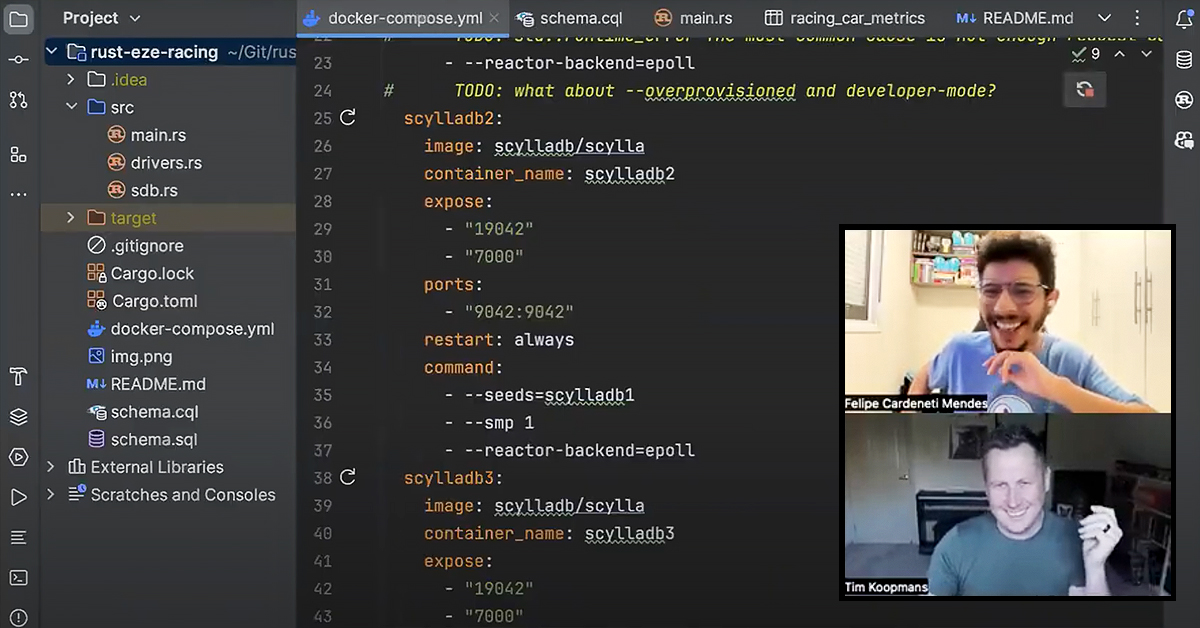

Of course, there are many (bad) habits to break along the way, and instead of just documenting these, I ended up planning a 3 part livestream series with my good friend Felipe from ScyllaDB.

ScyllaDB is a NoSQL database that is designed from the ground up with performance in mind. And by that, I mean optimizing for speed and throughput. I won’t go into the architecture details here, there’s a lot of material that you might find interesting if you want to read more in the resources section of this site.

Scaling your database is a nice problem to have, it’s a good indicator that your business is doing well. Many customers of ScyllaDB have been on that exact journey – some compelling event that perhaps has driven them to consider moving from one database or another, all with the goal of driving down latency and increasing throughput.

I’ve been through this journey myself, many times in the past, and I thought it would be great if I could re-learn, or perhaps just ditch some bad habits, by documenting the trials and tribulations of moving from a relational database to ScyllaDB. Even better if someone else benefits from these mistakes!

Fictional App, Real Scaling Challenges

So we start with a fictional application I have built. It’s the Rust-Eze Racing application which you can find on my personal GitHub here: https://github.com/timkoopmans/rust-eze-racing

If you have kids, or are just a fan of the Cars movies, you’re no doubt familiar with Rust-Eze, which is one of the Piston Cup teams. — and also, a sponsor of the famous Lightning McQueen… Anyway, I digress.

The purpose of this app is built around telemetry, a key factor in modern motor racing. It allows race engineers to interpret data that is captured from car systems so that they can tune for optimum performance. I created a simple Rust application that could simulate these metrics being written to the database, while at the same time having queries that read different metrics in real time. For the proof of concept, I used Postgres for data storage and was able to get decent read and write throughput from it on my development machine.

Since we’re still in the world of make believe and cars that can talk, I want you to imagine that my PoC was massively successful, and I have been hired to write the whole back end system for the Piston Cup. At this point in the scenario, with overwhelming demands from the business, I start to get nervous about my choice of database:

- Will it scale? What happens when I have millions or perhaps billions of rows?

- What happens when I add many more columns and rows with high cardinality to the database?

- How can I achieve the highest write throughput while maintaining predictable low latency?

All the usual questions a real full-stack developer might start to consider in a production scenario…

This leads us to the mistakes I made and the journey I went through, spiking ScyllaDB as my new database of choice.

Getting my development environment up and running, and connecting to the database for the first time

In my first 1:1 livestreamed session with Felipe, I got my development environment up and running, using docker-compose to set up my first ScyllaDB node. I was a bit over-ambitious and set up a 3-node cluster, since a lot of the training material references this as a minimum requirement for production. Felipe suggested it’s not really required for development and that one node is perfectly fine.

There are some good lessons in there, though…

I got a bit confused with port mapping and the way that works in general. In Docker, you can map a published port on the host to a port in the container – so I naturally thought: Let’s map each of the container’s 9042 ports to a unique number on the host to facilitate client communication. I did something like this:

ports:

- "9041:9042"And I changed the port number for 3 nodes such that 9041 was node 1, 9042 was node 2 and 9043 was node 3. Then in my Rust code, I did something like this:

SessionBuilder::new()

.known_nodes(vec!["0.0.0.0:9041", "0.0.0.0:9042", "0.0.0.0:9043"])

.build()

.awaitI did this thinking that the client would then know how to reach each of the nodes. As it turns out, that’s not quite true, depending on what system you’re working on. On my Linux machine, there didn’t seem to be any problem with this, but on macOS there are problems. Docker runs differently on macOS than Linux – Docker uses the Linux kernel, so these routes would always work, but macOS doesn’t have a Linux Kernel, so Docker has to run in a Linux virtual machine. When the client app connects to a node in ScyllaDB, part of the discovery logic in the driver is to ask for other nodes it can communicate with (read more on shard aware drivers here). Since ScyllaDB will advertise other nodes on 9042 ,they simply won’t be reachable. So on Linux, it looks like it established TCP comms with three nodes:

❯ netstat -an | grep 9042 | grep 172.19

tcp 0 0 172.19.0.1:52272 172.19.0.2:9042 ESTABLISHED

tcp 0 0 172.19.0.1:36170 172.19.0.3:9042 ESTABLISHED

tcp 0 0 172.19.0.1:40718 172.19.0.4:9042 ESTABLISHEDBut on macOS it looks a little different, with only one of the nodes with established TCP comms and the others stuck in SYN_SENT.

The short of it is, you don’t really need to do this in development if you’re just using a single node! I was able to simplify my docker-compose file and avoid this problem. In reality, production nodes would most likely be on separate hosts/pods, so no need to map ports anyway. The nice thing about running with one node instead of three is that you’ll also avoid this type of problem, depending on which platform you’re using:

std::runtime_error The most common cause is not enough request capacity in /proc/sys/fs/aio-max-nrI was able to circumvent this issue by using this flag:

--reactor-backend=epollThis option switches Seastar threads to use epoll for event polling, as opposed to the default linux-aio implementation. This may be necessary for development workstations (in particular Mac OS deployments) where increasing the value for fs.aio-max-nr on the host system may not turn out to be so easy. Note that linux-aio (the default) is still the recommended option for production deployments.

Another mistake I made was using this argument:

--overprovisionedAs it turns out, this argument needs a value, e.g. 1 to set this flag. However, it’s not necessary since this argument and the following are already set when you’re using ScyllaDB in docker:

--developer-modeThe refactored code looks something like this:

scylladb1:

image: scylladb/scylla

container_name: scylladb1

expose:

- "19042"

- "7000"

ports:

- "9042:9042"

restart: always

command:

- --smp 1

- --reactor-backend=epollThe only additional argument worth using in development is the --smp command line option to restrict ScyllaDB to a specific number of CPUs. You can read more about that argument and other recommendations when using Docker to run ScyllaDB here.

As I write all this out, I think to myself: This seems like pretty basic advice. But you will see from our conversation that these learnings are pretty typical for a developer new to ScyllaDB. So hopefully, you can take something away from this and avoid making the same mistakes as me.

Watch the First Session On Demand

The Next Step: NoSQL Data Modeling

In the second session with Felipe, we went deeper into my first queries using ScyllaDB and getting the app up and running for the PoC. You can watch this on demand at Developer Data Modeling Mistakes: From Postgres to NoSQL.

Watch the Second Session On Demand

Next Up: Optimizing NoSQL Performance Through Observability

I still have lots to unlearn, apparently. 😉 In the next session with Felipe, we’ll focus on using monitoring and performance tuning to discover and correct mistakes that commonly occur when developers move from SQL to NoSQL. Join us as May 23 we work through it together. There will be lots to learn — and probably also a fair share of Aussie/Brazil jokes too.

Join Us at the Next Livestream

We’re planning to cover topics such as:

- Common issues getting up and running with the monitoring stack

- Using the CQL optimizations dashboard

- Common issues causing high latency in a node

- Common issues causing replica imbalance

- What a healthy system looks like in terms of memory

- Key metrics to keep an eye on

Hope to see you there!