The Internet is not just connecting people around the world. Through the Internet of Things (IoT), it is also connecting humans to the machines all around us and directly connecting machines to other machines. In this blog post we’ll share an emerging machine-to-machine (M2M) architecture pattern in which MQTT, Apache Kafka and ScyllaDB all work together to provide an end-to-end IoT solution. We’ll also provide demo code so you can try it out for yourself.

IoT Scale

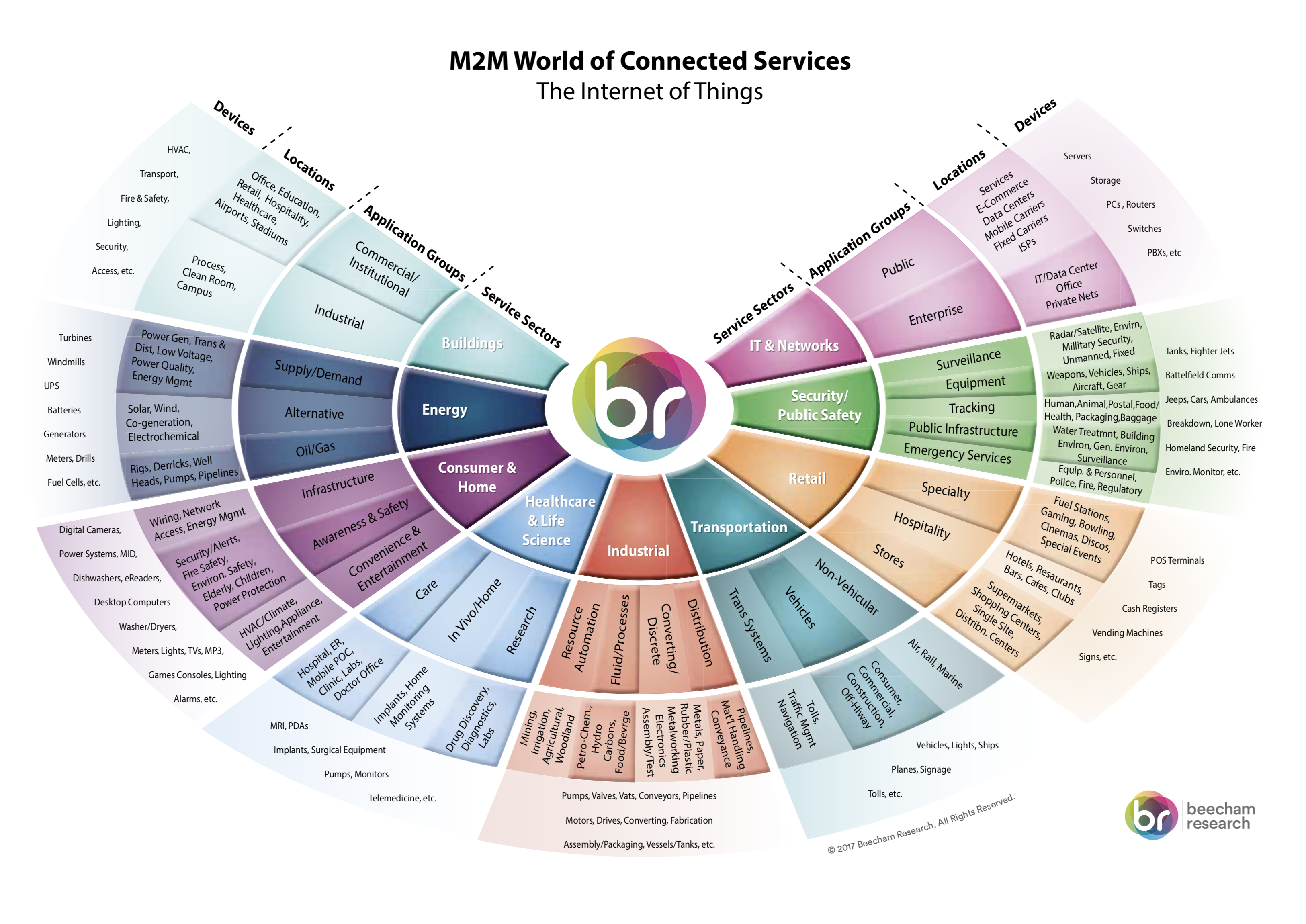

IoT is a fast-growing market, estimated at over $1.2 trillion in 2017 and anticipated to grow to over $6.5 trillion by 2024. The explosive number of devices generating, tracking, and sharing data across a variety of networks overwhelms most data management solutions. With more than 25 billion connected devices in 2018 and internet penetration up a staggering 1066% since 2000, the opportunity in the IoT market is significant.

There’s a wide variety of IoT applications, like data center and physical plant monitoring, manufacturing (a multibillion-dollar sub-category known as Industrial IoT, or IIoT), smart meters, smart homes, security monitoring systems and public safety, emergency services, smart buildings (both commercial and industrial), healthcare, logistics & cargo tracking, retail, self-driving cars, ride sharing, navigation and transport, gaming and entertainment… the list goes on.

A significant dependency for this growth is the overall reliability and scalability of IoT deployments. As Internet of Things projects go from concepts to reality, one of the biggest challenges is how the data created by devices will flow through the system. How many devices will create information? What protocols do the devices use to communicate? How will they send that information back? Will you need to capture that data in real time, or in batches? What role will analytics play in the future? What follows is an example of such a system, using existing best-in-class technologies.

An End-to-End Architecture for the Internet of Things

IoT-based applications (both B2C and B2B) are typically built in the cloud as microservices with similar characteristics. It is helpful to think about the data created by the devices and the applications in three stages:

- Stage one is the initial creation — where data is created on the device and then sent over the network.

- Stage two is how the central system collects and organizes that data.

- Stage three is the ongoing use of that data stored in a persistent storage system.

Typically, when sensors/smart-devices get actuated they create data. This information can then be sent over the network back to the central application. At this point, one must decide which standard the data will be created in and how it will be sent over the network.

One widely used protocol for delivering this data is the Message Queuing Telemetry Transport (MQTT) protocol. MQTT is a lightweight publish-subscribe messaging protocol typically used for M2M communication. Apache Kafka® is not a replacement for MQTT. Rather, because MQTT is not built for high scalability, long-term storage or easy integration with legacy systems, the two complement each other well.

In an IoT solution, devices can be classified into sensors and actuators. Sensors generate data points, while actuators are mechanical components that may be controlled through commands. For example, a reading of the ambient lighting in a room may be used to adjust the brightness of an LED bulb. MQTT is the protocol optimized for such sensor networks and M2M communication. However, since MQTT is designed for low-power and coin-cell-operated devices, it cannot handle the ingestion of massive datasets.

On the other hand, Apache Kafka can handle high-velocity data ingestion but is not designed for M2M communication. Scalable IoT solutions use MQTT for explicit device communication while relying on Apache Kafka for ingesting sensor data. It is also possible to bridge Kafka and MQTT for ingestion, but it is recommended to keep them separate by configuring the devices or gateways as Kafka producers while they still participate in the M2M network managed by an MQTT broker.
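The sensor and actuator example above (ambient light driving an LED bulb) can be sketched with a toy in-memory publish-subscribe broker. This is only an illustration of the pattern, not a real MQTT client: the `InMemoryBroker` and `LedBulb` classes, the topic name `room1/ambient_light` and the 500 lux cutoff are all assumptions made up for this example.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Toy stand-in for an MQTT broker: exact-match topics, no network, no QoS."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        # Deliver the payload to every callback registered for this topic.
        for callback in self._subscribers[topic]:
            callback(payload)


class LedBulb:
    """Actuator: sets its brightness (0-100 %) from ambient light readings."""

    MAX_LUX = 500.0  # assumed: at or above this level the bulb switches off

    def __init__(self) -> None:
        self.brightness = 0

    def on_ambient_light(self, payload: str) -> None:
        # Clamp the reading, then map darker rooms to a brighter bulb (linear).
        lux = min(max(float(payload), 0.0), self.MAX_LUX)
        self.brightness = round(100 * (1 - lux / self.MAX_LUX))


broker = InMemoryBroker()
bulb = LedBulb()
broker.subscribe("room1/ambient_light", bulb.on_ambient_light)

# Sensor side: publish a reading of 125 lux.
broker.publish("room1/ambient_light", "125")
print(bulb.brightness)  # 75
```

The sensor never addresses the bulb directly. It only publishes to a topic, which is the decoupling that makes pub-sub a good fit for M2M networks.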

At stage two, data typically lands as streams in Kafka and is arranged in the corresponding topics that various IoT applications consume for real-time decision making. Various options like KSQL and Single Message Transforms (SMT) are available at this stage.

At stage three this data, which typically has a shelf life, is streamed into a long-term store like ScyllaDB using the Kafka Connect framework. A scalable, distributed, peer-to-peer NoSQL database, ScyllaDB is a perfect fit for consuming the variety, velocity and volume of data (often time-series) coming directly from users, devices and sensors spread across geographic locations.
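The three stages can be sketched end to end in plain Python. This is a simplified in-memory model, assuming one topic per metric and a store keyed by metric and device. The `Reading` type, the function names and the sample values are hypothetical, and no Kafka or ScyllaDB client is involved.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Reading:
    device_id: str
    metric: str      # e.g. "temperature" or "brightness"
    timestamp: int   # seconds since the epoch
    value: float


def stage_one_create() -> List[Reading]:
    """Stage one: devices create readings (hard-coded sample data here)."""
    return [
        Reading("sensor-1", "temperature", 1_536_000_060, 21.7),
        Reading("sensor-2", "brightness", 1_536_000_001, 340.0),
        Reading("sensor-1", "temperature", 1_536_000_000, 21.5),
    ]


def stage_two_collect(readings: List[Reading]) -> Dict[str, List[Reading]]:
    """Stage two: organize readings into per-metric topics (append-only logs)."""
    topics: Dict[str, List[Reading]] = defaultdict(list)
    for reading in readings:
        topics[reading.metric].append(reading)
    return topics


def stage_three_store(
    topics: Dict[str, List[Reading]],
) -> Dict[Tuple[str, str], List[Tuple[int, float]]]:
    """Stage three: persist rows keyed by (metric, device), ordered by time."""
    table: Dict[Tuple[str, str], List[Tuple[int, float]]] = defaultdict(list)
    for metric, log in topics.items():
        for reading in log:
            table[(metric, reading.device_id)].append((reading.timestamp, reading.value))
    for rows in table.values():
        rows.sort()  # time-series rows are kept in timestamp order
    return table


table = stage_three_store(stage_two_collect(stage_one_create()))
print(table[("temperature", "sensor-1")])
# [(1536000000, 21.5), (1536000060, 21.7)]
```

Keying the stored rows by metric and device while ordering them by timestamp mirrors the usual time-series layout, where one key groups a device's readings and the rows within it are sorted by time.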

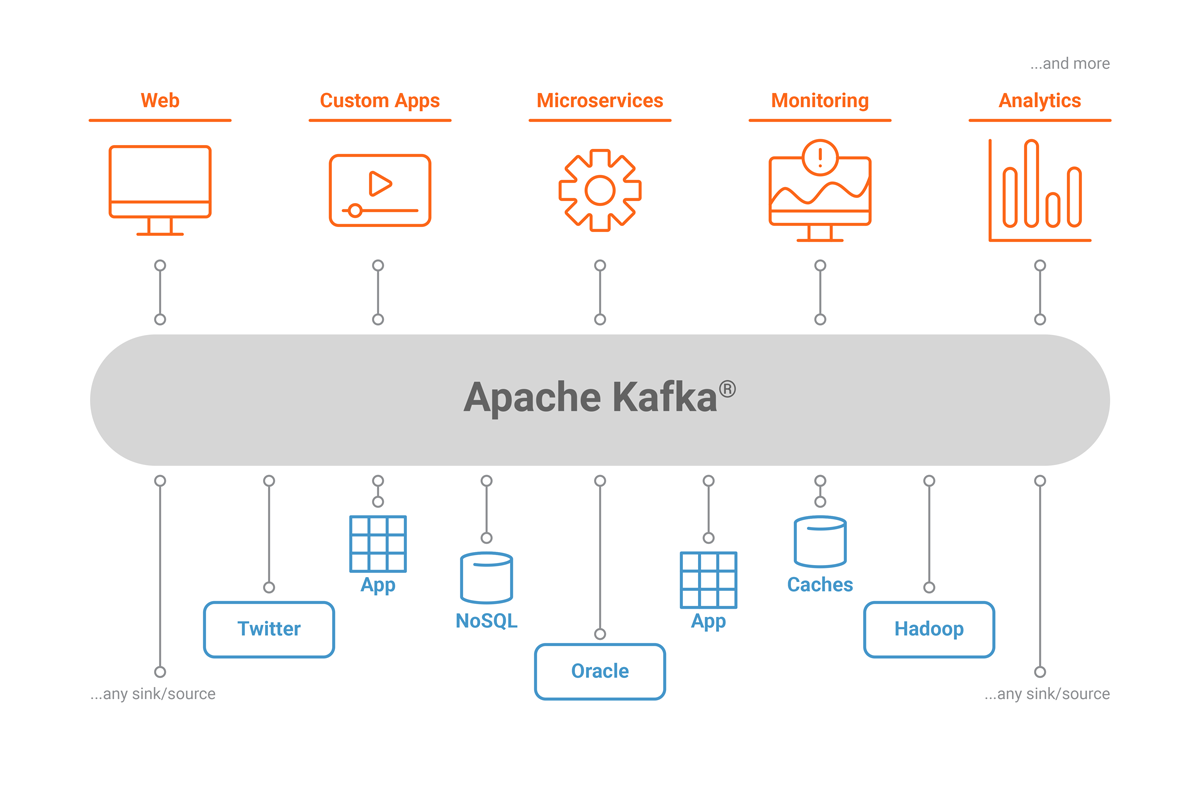

What is Apache Kafka?

Apache Kafka is an open source distributed message queuing and streaming platform capable of handling a high volume and velocity of events. Since being created and open sourced by LinkedIn in 2011, Kafka has quickly evolved from a message queuing system to a full-fledged streaming platform.

Enterprises typically accumulate large amounts of data over time from different sources and data types such as IoT devices and microservices applications. Traditionally, for businesses to derive insights from this data they used data warehousing strategies to perform Extract, Transform, Load (ETL) operations that are batch-driven and run at a specific cadence. This leads to an unmanageable situation as custom scripts move data from their sources to destinations as one-offs. It also creates many single points of failure and does not permit analysis of the data in real time.

Kafka provides a platform that can arrange all these messages by topics and streams. Kafka is enterprise ready and has features like high availability (HA) and replication on commodity hardware. By decoupling the sources from the downstream systems that need to perform business-driven actions on the data, Kafka resolves the impedance mismatch between them.

What is ScyllaDB?

ScyllaDB is a scalable, distributed, peer-to-peer NoSQL database. It is a drop-in replacement for Apache Cassandra™ that delivers as much as 10X better throughput and more consistent low latencies. It also provides better cluster resource utilization while building upon the existing Apache Cassandra ecosystem and APIs.

Most microservices developed in the cloud prefer a cloud-native distributed database that can scale linearly. ScyllaDB fits that use case well by harnessing modern multi-core/multi-CPU architecture and producing low, predictable response latencies. ScyllaDB is written in C++, which results in significant improvements in TCO and ROI and an overall better user experience.

ScyllaDB is a perfect complement to Kafka because it leverages the best from Apache Cassandra in high availability, fault tolerance, and its rich ecosystem. Kafka is not an end data store itself, but a system to serve a number of downstream storage systems that depend on sources generating the data.

Demo of ScyllaDB and Confluent Integration

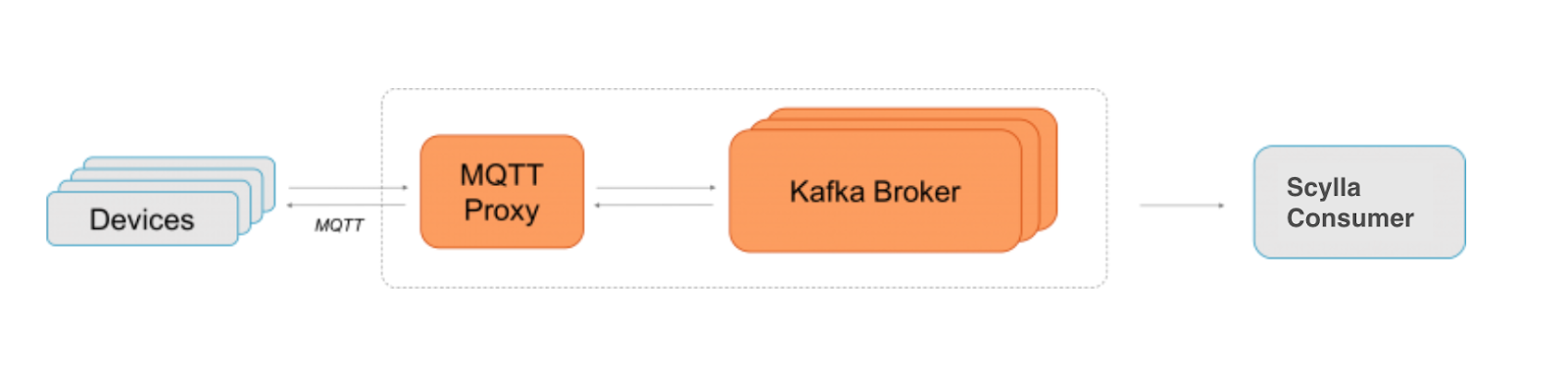

The goal of this demo is to demonstrate an end-to-end use case where sensors emit temperature and brightness readings to Kafka and the messages are then processed and stored in ScyllaDB. To demonstrate this, we are using Kafka MQTT proxy (part of the Confluent Enterprise package), which acts as a broker for all the sensors that are emitting the readings.

We also use the Kafka Connect Cassandra connector, which spins up the necessary consumers to stream the messages into ScyllaDB. ScyllaDB supports both the data format (SSTable) and all relevant external interfaces, which is why we can use the out of the box Kafka Connect Cassandra connector.

The load from various sensors is simulated as MQTT messages via the MQTT Client (Mosquitto), which will publish to the Kafka MQTT broker proxy. All the generated messages are then published to the corresponding topics and then a ScyllaDB consumer picks up the messages and stores them into ScyllaDB.

Steps

- Download Confluent Enterprise

- Once the tarball is downloaded – then:

- Set the $PATH variable

For the demo we chose to run the Kafka cluster locally, but to run this in production we would have to modify a few files to include the actual IP addresses of the cluster:

- Zookeeper – /etc/kafka/zookeeper.properties

- Kafka – /etc/kafka/server.properties

- Schema Registry – /etc/schema-registry/schema-registry.properties

- Now we need to start Kafka and ZooKeeper

This should start both ZooKeeper and Kafka. To do this manually you have to provide these two parameters

- Configure MQTT Proxy

Inside the directory /etc/confluent-kafka-mqtt, there is a kafka-mqtt-dev.properties file that comes with the Confluent distribution. It lists all the available configuration options for MQTT Proxy. Modify these parameters:

- Create Kafka topics

The simulated MQTT devices will be publishing to the topics temperature and brightness, so let’s create those topics in Kafka manually.
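MQTT Proxy decides which Kafka topic receives a message by matching the MQTT topic name against a list of `kafkaTopic:regex` pairs (the `topic.regex.list` setting). The sketch below imitates that routing in Python. The sample value, the full-match semantics and the first-match-wins ordering are assumptions for illustration, so check the MQTT Proxy documentation for the exact behavior.

```python
import re
from typing import List, Optional, Tuple


def parse_topic_regex_list(value: str) -> List[Tuple[str, re.Pattern]]:
    """Parse 'kafkaTopic:regex, kafkaTopic:regex' into (topic, pattern) rules.

    Simplification: a regex that itself contains a comma is not supported.
    """
    rules = []
    for pair in value.split(","):
        kafka_topic, pattern = pair.strip().split(":", 1)
        rules.append((kafka_topic.strip(), re.compile(pattern.strip())))
    return rules


def route(mqtt_topic: str, rules: List[Tuple[str, re.Pattern]]) -> Optional[str]:
    """Return the Kafka topic of the first rule whose regex matches, else None."""
    for kafka_topic, pattern in rules:
        if pattern.fullmatch(mqtt_topic):
            return kafka_topic
    return None


# Assumed sample value covering the two demo topics.
rules = parse_topic_regex_list("temperature:.*temperature, brightness:.*brightness")

print(route("home/livingroom/temperature", rules))  # temperature
print(route("brightness", rules))                   # brightness
print(route("humidity", rules))                     # None
```

With rules like these, any MQTT topic ending in `temperature` or `brightness` lands in the Kafka topic of the same name, and anything else is left unrouted.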

- Start the MQTT proxy

This is how we start the configured MQTT proxy

- Install the Mosquitto framework

- Publish MQTT messages

We are going to publish messages with QoS 2, which is the highest quality of service supported by the MQTT protocol
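MQTT defines three QoS levels: 0 (at most once), 1 (at least once) and 2 (exactly once). As a minimal sketch, the helper below assembles a `mosquitto_pub` command line that publishes at QoS 2. The `-h`, `-p`, `-t`, `-q` and `-m` flags are standard `mosquitto_pub` options, while the host, the port 1881 and the payload are assumptions that must match your own MQTT Proxy listener.

```python
from enum import IntEnum
from typing import List


class QoS(IntEnum):
    AT_MOST_ONCE = 0   # fire and forget
    AT_LEAST_ONCE = 1  # acknowledged, duplicates possible
    EXACTLY_ONCE = 2   # four-step handshake, no duplicates


def mosquitto_pub_command(
    topic: str,
    message: str,
    qos: QoS = QoS.EXACTLY_ONCE,
    host: str = "localhost",  # assumed: proxy runs locally
    port: int = 1881,         # assumed listener port
) -> List[str]:
    """Build the argument list for a single mosquitto_pub invocation."""
    return [
        "mosquitto_pub",
        "-h", host,
        "-p", str(port),
        "-t", topic,
        "-q", str(int(qos)),
        "-m", message,
    ]


command = mosquitto_pub_command("temperature", "21.5")
print(" ".join(command))
# mosquitto_pub -h localhost -p 1881 -t temperature -q 2 -m 21.5
```

The list can be passed to `subprocess.run(command, check=True)` once the proxy is listening.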

- Verify messages in Kafka

Make sure that the messages are published into the Kafka topic

- To produce a continuous feed of MQTT messages (optional)

Run this on the terminal
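One way to produce such a feed is a small generator that alternates between the two demo topics. This is a hedged sketch: the value ranges, the plain-number payload format and the `sensor_feed` name are assumptions, and actually sending each pair through an MQTT client is left out.

```python
import itertools
import random
from typing import Iterator, Tuple


def sensor_feed(seed: int = 0) -> Iterator[Tuple[str, str]]:
    """Yield an endless stream of (topic, payload) pairs for the demo topics."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    while True:
        # Assumed ranges: 15-30 degrees Celsius and 0-1000 lux.
        yield "temperature", f"{rng.uniform(15.0, 30.0):.1f}"
        yield "brightness", f"{rng.uniform(0.0, 1000.0):.0f}"


# Take the first four messages; a real feed would loop and sleep between sends.
for topic, payload in itertools.islice(sensor_feed(), 4):
    print(topic, payload)
```

Each `(topic, payload)` pair can then be handed to whichever MQTT client you use to publish to the proxy.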

- Let’s start a ScyllaDB cluster and make it a Kafka Connect sink

Note: If you choose to run ScyllaDB in a different environment, start from here: https://www.scylladb.com/download/

Once the cluster comes up with 3 nodes, ssh into each node, uncomment the broadcast address in /etc/scylla/scylla.yaml, and change it to the public address of the node. This is needed if we are running the demo locally on a laptop, or if the Kafka Connect framework runs in a different data center from the ScyllaDB cluster.

- Let’s create a file cassandra-sink.properties

This will enable us to start the Connect framework with the necessary properties.

Add these lines to the properties file
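As a rough illustration of what such a file can contain, the sketch below renders a sink configuration for the temperature topic. Treat every value as an assumption: the connector name, contact point, port and keyspace are placeholders, and the `connect.cassandra.*` keys and the connector class are written as they appear in the Stream Reactor Cassandra sink documentation, so verify them against the connector version you install.

```python
from typing import Dict


def render_properties(settings: Dict[str, str]) -> str:
    """Render a dict as Java-style 'key=value' lines, one per setting."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


sink_settings = {
    "name": "scylladb-sink",  # hypothetical connector name
    "connector.class": (
        "com.datamountaineer.streamreactor.connect.cassandra.sink."
        "CassandraSinkConnector"
    ),
    "tasks.max": "1",
    "topics": "temperature",
    # KCQL statement: copy every field of the topic into the table of the same name.
    "connect.cassandra.kcql": "INSERT INTO temperature SELECT * FROM temperature",
    "connect.cassandra.contact.points": "localhost",  # assumed: a ScyllaDB node address
    "connect.cassandra.port": "9042",                 # default CQL port
    "connect.cassandra.key.space": "demo",            # hypothetical keyspace
}

print(render_properties(sink_settings), end="")
```

The brightness topic would need its own entry in `topics` and a matching KCQL statement.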

- Next we need to download the binaries for the stream reactor

Now change the plugin.path property in /confluent-5.0.0/etc/schema-registry/connect-avro-distributed.properties to ABSOLUTE_PATH/confluent-5.0.0/lib/stream-reactor-1.1.0-1.1.0/libs/

- Now let’s start the Connect framework in distributed mode

- Get the ScyllaDB sink connector up and running:

After you run the above command, you should be able to see ScyllaDB as a Cassandra sink, and any messages published using the instructions in the Publish MQTT messages step will be written to ScyllaDB as a downstream system.
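In distributed mode, connectors can also be registered through the Kafka Connect REST API by POSTing a JSON body with `name` and `config` fields to `/connectors`. The sketch below turns properties-file text into such a request without sending it. The worker URL `http://localhost:8083` is the usual default but still an assumption here, and the connector class in the sample text is a placeholder, not a real class.

```python
import json
import urllib.request
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple 'key=value' lines, skipping blanks and '#' comments."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    return config


def build_create_request(
    properties_text: str,
    connect_url: str = "http://localhost:8083",  # assumed Connect worker address
) -> urllib.request.Request:
    """Build (but do not send) the POST /connectors request."""
    config = parse_properties(properties_text)
    body = {"name": config["name"], "config": config}
    return urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


example = """
# placeholder values for illustration only
name=scylladb-sink
connector.class=com.example.PlaceholderSinkConnector
tasks.max=1
topics=temperature
"""

request = build_create_request(example)
print(request.get_method(), request.full_url)
# POST http://localhost:8083/connectors
```

Calling `urllib.request.urlopen(request)` submits it once the Connect worker is running.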

- Now, let’s run a script that simulates the activity of an MQTT device. You can do this by cloning this repo: https://github.com/mailmahee/MQTTKafkaConnectScyllaDB

And then running

This script simulates MQTT sensor activity and publishes messages to the corresponding topics. The Connect framework then drains the messages from the topics into the corresponding tables in ScyllaDB.

You did it!

If you follow the instructions above, you should now be able to connect Kafka and ScyllaDB using the Connect framework. In addition, you should be able to generate MQTT workloads that publish messages to the corresponding Kafka topics, which are then used for both real-time and batch analytics via ScyllaDB.

Given that applications in IoT are by and large based on streaming data, the alignment between MQTT, Kafka and ScyllaDB makes a great deal of sense. With the new ScyllaDB connector, application developers can easily build solutions that harness IoT-scale fleets of devices, as well as store the data from them in ScyllaDB tables for real-time as well as analytic use cases.

Many of ScyllaDB’s IoT customers like General Electric, Grab, Nauto and Meshify use ScyllaDB and Kafka as the backend for handling their application workloads. Whether a customer is rolling out an IoT deployment for commercial fleets, consumer vehicles, remote patient monitoring or a smart grid, our single-minded focus on the IoT market has led to scalable service offerings that are unmatched in cost efficiency, quality of service and reliability.

Try It Yourself

to use MQTT Proxy