Springtime is here! It’s time for our monthly update on Project Circe, our initiative to make ScyllaDB into an even more monstrous database. Monstrously more durable, stable, elastic, and performant. In March 2021 we released ScyllaDB Open Source 4.4. This new software release provides a number of features and capabilities that fall under the key improvement goals we set out for Project Circe. Let’s hone in on the recent performance and manageability improvements we’ve delivered.

New Scheduler

The Seastar I/O scheduler is used to maximize the requests throughput from all shards to the storage. Until now, the scheduler was running in a per-shard scope: each shard runs its own scheduler, balanced between its I/O tasks, like reads, updates and compactions. This works well when the workload between shards is approximately balanced; but when, as often happened, one shard was more loaded, it could not take more I/O, even if other shards were not using their share. I/O scheduler 2.0 included in ScyllaDB 4.4 fixes this. As storage bandwidth and IOPS are shared, each shard can use the whole disk if required.

Drivers in the Fast Lane

While we’ve been optimizing our server performance, we also know the other side of the connection needs to be able to keep up. So in recent months we have been polishing our existing drivers and releasing all-new, shard-aware drivers.

We recently updated our shard-aware drivers for Java and Go (GoCQL) to support Change Data Capture (CDC). This makes such data updates more easily consumable and highly performant. We are committed to adding CDC shard-awareness to all our supported drivers, such as our Python driver. Speaking of new drivers, have you checked out the new shard-aware C/C++ driver? Or how about the Rust driver we have in development? They’ll get the CDC update in due time too.

We also introduced new reference example of CDC consumer implementations:

You can use these examples when building a Go or Java base application feeding from a ScyllaDB CDC stream. Such an application can, for example, feed from a stream of IoT updates, updating the latest min and max value in an aggregation ScyllaDB table.

Manageability Improvements

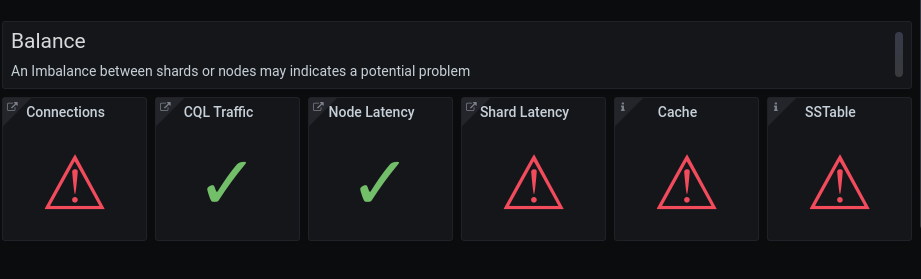

Advisor for ScyllaDB Monitoring Stack

We recently announced a ScyllaDB Monitoring Advisor section. For example, it can help you diagnose issues with balance between your nodes. Are you seeing unacceptable latencies from a particular shard? Low cache hit rate? Connection problems? ScyllaDB Advisor helps you pinpoint your exact performance bottlenecks so you can iron them out.

Look forward to additional capabilities and more in-depth documentation of this feature in ScyllaDB Monitoring Stack 3.7, which is right around the corner.

New API for ScyllaDB Manager

ScyllaDB Manager 2.3 adds a new suspend/resume API, allowing users to set a maintenance window in which no recurrent or ad-hoc task, like repair or backup, is running.

Additional Performance Optimizations

Improved performance is often the result of many smaller improvements. Here’s a list of ways we’ve recently further improved the performance of ScyllaDB with our 4.4 release:

- Repair works by having the repairing node (“repair master”) merge data from the other nodes (“repair followers”) and write the local differences to each node. Until now, the repair master calculated the difference with each follower independently and so wrote an SStable corresponding to each follower. This creates additional work for compaction, and is now eliminated as the repair master writes a single SStable containing all the data. #7525

- When aggregating (for example,

SELECT count(*) FROM tab), ScyllaDB internally fetches pages until it is able to compute a result (in this case, it will need to read the entire table). Previously, each page fetch had its own timeout, starting from the moment the page fetch was initiated. As a result, queries continued to be processed long after the client gave up on them, wasting CPU and I/O bandwidth. This was changed to have a single timeout for all internal page fetches for an aggregation query, limiting the amount of time it can be alive. #1175 - ScyllaDB maintains system tables that track any SSTables that have large cells, rows, or partitions for diagnostics purposes. When tracked SSTables were deleted, ScyllaDB deleted the records from the system tables. Unfortunately, this uses range tombstones, which are not (yet) well supported in ScyllaDB. A series was merged to reduce the number of range tombstones to reduce impact on performance. #7668

- Queries of Time Window Compaction Strategy tables now open SSTables in different windows as the query needs them, instead of all at once. This greatly reduces read amplification, as noted in the commit. #6418

- For some time now, ScyllaDB reshards (rearranges SSTables to contain data for one shard) on boot. This means we can stop considering multi-shard SSTables in compaction, as done here. This speeds up compaction a little. A similar change was done to cleanup compactions. #7748

- During bootstrap, decommission, compaction, and reshape ScyllaDB will separate data belonging to different windows (in Time Window Compaction Strategy) into different SSTables (to preserve the compaction strategy invariant). However, it did not do so for memtable flush, requiring a reshape if the node was restarted. It will now flush a single memtable into multiple SSTables, if needed. #4617

Reducing Latency Spikes

- Large SSTables with many small partitions often require large bloom filters. The allocation for the bloom filters has been changed to allocate the memory in steps, reducing the chance of a reactor stall. #6974

- The token_metadata structure describes how tokens are distributed across the cluster. Since each node has 256 tokens, this structure can grow quite large, and updating it can take time, causing reactor stalls and high latency. It is now updated by copying it in the background, performing the change, and swapping the new variant into the place of the old one atomically. #7220 #7313

- A cleanup compaction removes data that the node no longer owns (after adding another node, for example) from SSTables and cache. Computing the token ranges that need to be removed from cache could take a long time and stall, causing latency spikes. This is now fixed. #7674

- When deserializing values sent from the client, ScyllaDB will no longer allocate contiguous space for this work. This reduces allocator pressure and latency when dealing with megabyte-class values. #6138

- Continuing improvement of large blob support, we now no longer require contiguous memory when serializing values for the native transport, in response to client queries. Similarly, validating client bind parameters also no longer requires contiguous memory. #6138

- The memtable flush process was made more preemptible, reducing the probability of a reactor stall. #7885

Get ScyllaDB Open Source

Check out the release notes in full, and then head to our Download Center to get your own copy of ScyllaDB Open Source 4.4.