This post first appeared in Brandon’s Blog. Brandon Lamb is Application Developer Lead on a team at Starbucks, primarily focused on Inventory Management System and Master Location Data.

For a new side project, I’ve been setting up some colocated servers instead of paying more for cloud hosting. As part of this effort I need a database, and I thought it was time to explore a NoSQL option for its scalability, reliability and, hopefully, ease of replication.

In parallel, the same effort is happening at work, so the timing is great. Cassandra has been on our list as the database of choice, but I happened to come across ScyllaDB, and it looks pretty awesome.

Now fast-forward through learning how to set up Docker Engine Swarm (1.12) and running into the problem of persistent containers. Long story short, I decided to bite the bullet and treat the data layer as pets rather than cattle. That meant going with LXC containers, because they are designed as system containers rather than application containers like Docker.

I am using Ubuntu 16.04 on my host machine, a Dell R610 (1U rackmount) with dual quad-core CPUs, 48G of RAM and six SSDs in RAID 10.

To get started, you need to install a few packages:

apt-get install -y ifenslave inetutils-traceroute lxd lxd-tools lxd-client bridge-utils criu zfsutils-linux

Configure networking

I’m using a bridge so that my containers can talk to my network, which runs on a MikroTik Cloud Router Switch on 10.1.x networks.

To configure the default LXC profile to use the bridge:

lxc profile device set default eth0 nictype bridged

lxc profile device set default eth0 parent br0
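Both commands assume a bridge called br0 already exists on the host. As a hedged sketch, the helper below wraps them and refuses to touch the profile if the named interface is not a bridge. It relies on Linux exposing a `bridge/` directory under `/sys/class/net/<iface>` only for bridge devices. The `attach_profile_to_bridge` name, the `DRY_RUN` switch and the `SYSFS_NET` override (there only so the check can be tested without a real bridge) are my own additions, not part of LXD.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point a profile's eth0 NIC at an existing host bridge.

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

attach_profile_to_bridge() {
  local profile="$1" bridge="$2"
  local sysfs="${SYSFS_NET:-/sys/class/net}"
  # Only bridge devices have a bridge/ subdirectory in sysfs.
  if [ ! -d "${sysfs}/${bridge}/bridge" ]; then
    echo "error: ${bridge} is not a bridge on this host" >&2
    return 1
  fi
  run lxc profile device set "$profile" eth0 nictype bridged || return 1
  run lxc profile device set "$profile" eth0 parent "$bridge"
}
```

Running `DRY_RUN=1 attach_profile_to_bridge default br0` previews the two commands; dropping `DRY_RUN=1` runs them.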

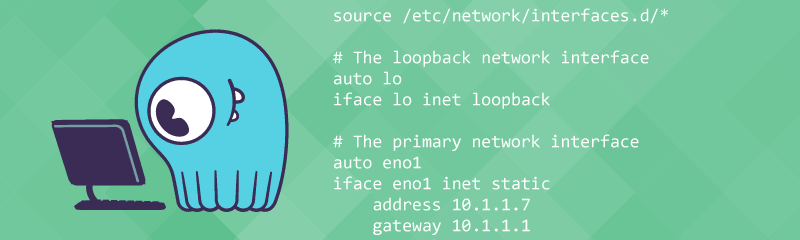

My /etc/network/interfaces looks like:

```
source /etc/network/interfaces.d/*

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eno1
iface eno1 inet static
    address 10.1.1.7
    gateway 10.1.1.1
    netmask 255.255.255.0
    dns-nameservers 10.1.1.1 8.8.8.8 8.8.4.4

auto br0
iface br0 inet static
    bridge-ifaces eno2
    bridge-ports eno2
    bridge_stp off
    bridge_fd 0
    bridge_maxwait 0
    address 10.1.2.7
    gateway 10.1.2.1
    netmask 255.255.255.0

auto eno2
iface eno2 inet manual
```

Essentially, I am bridging my second NIC, which is on my 10.1.2.x network, because I am using the primary NIC (10.1.1.x) for Docker.
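After bringing br0 up, it’s worth confirming that eno2 really is attached to it. `brctl show br0` from bridge-utils shows this. The sketch below reads the same information from sysfs, where each port of a bridge appears as an entry under `/sys/class/net/<bridge>/brif/`. The function names and the `SYSFS_NET` override (used only for testing) are hypothetical.

```bash
#!/usr/bin/env bash
# List the interfaces attached to a bridge, one per line (hypothetical helper).
bridge_ports() {
  local bridge="$1"
  local brif="${SYSFS_NET:-/sys/class/net}/${bridge}/brif"
  if [ ! -d "$brif" ]; then
    echo "error: ${bridge} is not a bridge" >&2
    return 1
  fi
  # Each port shows up as an entry (a symlink on real systems) under brif/.
  local port
  for port in "$brif"/*; do
    [ -e "$port" ] || [ -L "$port" ] || continue   # skip the unmatched glob
    basename "$port"
  done
}

# Succeed only if interface $2 is a port of bridge $1.
bridge_has_port() {
  bridge_ports "$1" | grep -qx -- "$2"
}
```

For example, `bridge_has_port br0 eno2 && echo "eno2 is on br0"`.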

Create hugepages LXC profile

Next up for ScyllaDB was creating an LXC profile for hugepages.

```
lxc profile create hugepages
lxc profile edit hugepages <<__END__
name: hugepages
config:
  raw.lxc: |
    lxc.mount.entry = hugetlbfs dev/hugepages hugetlbfs rw,relatime,create=dir 0 0
  security.privileged: "true"
__END__
```

I’m still working out whether the mount entry is actually needed, or whether security.privileged: "true" alone is what enables the use of hugepages.
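One way to answer this empirically is to look for a hugetlbfs mount inside the container, once with the `lxc.mount.entry` line and once without it. The sketch below scans `/proc/mounts`, where the third field is the filesystem type, and prints the mount point of every `hugetlbfs` entry. The function name and the optional file argument (used for testing) are hypothetical.

```bash
#!/usr/bin/env bash
# Print the mount points of all hugetlbfs filesystems (hypothetical helper).
# Reads /proc/mounts by default; pass another file to inspect a saved copy.
hugetlbfs_mounts() {
  local mounts="${1:-/proc/mounts}"
  # /proc/mounts fields: device, mount point, fstype, options, dump, pass.
  awk '$3 == "hugetlbfs" { print $2 }' "$mounts"
}
```

The same check works from the host as a one-liner: `lxc exec db1-dev -- awk '$3 == "hugetlbfs" { print $2 }' /proc/mounts`. No output means nothing is mounted at /dev/hugepages in that container.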

Configure sysctl.conf for hugepages

On my host, I have also added the following to /etc/sysctl.conf:

vm.nr_hugepages = 256

I’m also not sure whether this should be done inside the container or on the host system. I guess I’ll have to experiment with the settings to find out.
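For reference, `vm.nr_hugepages` sizes the kernel’s huge page pool. Containers share the host kernel, so this is a host-wide setting, not a per-container one. `sysctl -p` applies /etc/sysctl.conf without a reboot. To see how much memory the pool actually reserves, the sketch below multiplies `HugePages_Total` by `Hugepagesize` from `/proc/meminfo`. With the x86_64 default of 2 MiB pages, 256 pages works out to 512 MiB. The function name and file argument are hypothetical.

```bash
#!/usr/bin/env bash
# Report the memory reserved by the huge page pool, in MiB (hypothetical helper).
# Reads /proc/meminfo by default; pass another file to inspect a saved copy.
hugepage_reserved_mib() {
  local meminfo="${1:-/proc/meminfo}"
  awk '
    /^HugePages_Total:/ { pages = $2 }
    /^Hugepagesize:/    { size_kb = $2 }   # reported in kB
    END { print pages * size_kb / 1024 }
  ' "$meminfo"
}
```

Running `sysctl -p && hugepage_reserved_mib` on the host shows whether the setting took effect.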

Next up is applying both the default and hugepages LXC profiles to my container:

lxc profile apply db1-dev default,hugepages

Now if I check out the info for the container:

```
root@s4:~# lxc info db1-dev
Name: db1-dev
Remote: unix:/var/lib/lxd/unix.socket
Architecture: x86_64
Created: 2017/01/01 21:48 UTC
Status: Running
Type: persistent
Profiles: default, hugepages
Pid: 18272
Ips:
  eth0: inet 10.1.2.252 vethTVF5E9
  eth0: inet6 fe80::216:3eff:fefb:8dfa vethTVF5E9
  lo: inet 127.0.0.1
  lo: inet6 ::1
Resources:
  Processes: 26
  Disk usage:
    root: 814.90MB
  Memory usage:
    Memory (current): 51.88MB
    Memory (peak): 60.45MB
  Network usage:
    eth0:
      Bytes received: 2.35MB
      Bytes sent: 165.00kB
      Packets received: 15527
      Packets sent: 764
    lo:
      Bytes received: 36.11MB
      Bytes sent: 36.11MB
      Packets received: 27984
      Packets sent: 27984
```

By default, LXC applies no resource limits to containers, so a newly launched container appears to have all of the host’s CPU cores and RAM available. We need to limit this so that the multiple ScyllaDB instances are not fighting over the same CPU and RAM.

Limit all three containers to 8GB RAM each

lxc config set db1-dev limits.memory 8GB

lxc config set db2-dev limits.memory 8GB

lxc config set db3-dev limits.memory 8GB
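Because the limit is the same for all three containers, a small loop avoids repeating the command. It also makes the budget easy to check: 3 × 8GB = 24GB of the host’s 48G, which leaves the rest for the host itself. The `set_memory_limit` name and the `DRY_RUN` switch are hypothetical.

```bash
#!/usr/bin/env bash
# Apply the same memory limit to every listed container (hypothetical helper).

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

set_memory_limit() {
  local limit="$1"; shift
  local c
  for c in "$@"; do
    run lxc config set "$c" limits.memory "$limit" || return 1
  done
}
```

Usage: `set_memory_limit 8GB db1-dev db2-dev db3-dev`.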

Pin each container to four specific CPU cores

My server has dual quad-core CPUs with hyper-threading, which gives 16 logical cores. This pins four of them to each of the three containers (12 in total), leaving CPUs 12 to 15 unassigned.

lxc config set db1-dev limits.cpu 0,1,2,3

lxc config set db2-dev limits.cpu 4,5,6,7

lxc config set db3-dev limits.cpu 8,9,10,11
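One caveat is worth checking here. With hyper-threading, each physical core shows up as two logical CPUs, and Linux numbering often places a core’s sibling far from it. On many dual-socket Intel boxes with 16 threads, CPU n and CPU n+8 share a core. If this host is numbered that way, db1-dev (0-3) and db3-dev (8-11) would be competing for the same physical cores. The kernel lists each CPU’s siblings in `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list`, and `lscpu -e` shows the same information. The sketch below prints that list for each CPU in a comma-separated `limits.cpu` value. The function name and the `SYSFS_CPU` override (for testing) are hypothetical.

```bash
#!/usr/bin/env bash
# For each CPU in a comma-separated list, print "cpu: siblings" (hypothetical helper).
# CPUs that appear in each other's sibling list share one physical core.
cpu_siblings() {
  local sysfs="${SYSFS_CPU:-/sys/devices/system/cpu}"
  local cpu f
  local -a cpus
  IFS=',' read -r -a cpus <<< "$1"
  for cpu in "${cpus[@]}"; do
    f="${sysfs}/cpu${cpu}/topology/thread_siblings_list"
    if [ ! -r "$f" ]; then
      echo "error: no topology info for cpu${cpu}" >&2
      return 1
    fi
    echo "${cpu}: $(cat "$f")"
  done
}
```

Compare `cpu_siblings 0,1,2,3` with `cpu_siblings 8,9,10,11`. If they report the same sibling pairs, pinning each container to whole cores (a CPU plus its sibling) would keep the instances off each other’s physical cores.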

Copy Ubuntu image

Since I will be running Ubuntu 16.04 in my containers as well, I need to pull down the image from the images: remote (the public image server at images.linuxcontainers.org).

This copies the image to my host under the local alias ubuntu-16.04:

lxc image copy images:ubuntu/xenial local: --alias=ubuntu-16.04

```
root@s4:~# lxc image list
+--------------+--------------+--------+--------------------------------------+--------+---------+------------------------------+
| ALIAS        | FINGERPRINT  | PUBLIC | DESCRIPTION                          | ARCH   | SIZE    | UPLOAD DATE                  |
+--------------+--------------+--------+--------------------------------------+--------+---------+------------------------------+
| alpine-3.4   | a9f7883f1916 | no     | Alpine 3.4 amd64 (20161231_17:50)    | x86_64 | 2.63MB  | Jan 1, 2017 at 10:44am (UTC) |
+--------------+--------------+--------+--------------------------------------+--------+---------+------------------------------+
| alpine-base  | e5d018db8a03 | no     |                                      | x86_64 | 25.63MB | Jan 1, 2017 at 9:38pm (UTC)  |
+--------------+--------------+--------+--------------------------------------+--------+---------+------------------------------+
| ubuntu-16.04 | 9eaf701ec247 | no     | Ubuntu xenial amd64 (20170101_03:49) | x86_64 | 81.37MB | Jan 1, 2017 at 10:45am (UTC) |
+--------------+--------------+--------+--------------------------------------+--------+---------+------------------------------+
```

Launch new container

The Ubuntu image is now ready for creating new containers. Launch the first one, calling it db1-dev:

lxc launch ubuntu-16.04 db1-dev

```
root@s4:~# lxc list
+-------------+---------+-------------------+------+------------+-----------+
| NAME        | STATE   | IPV4              | IPV6 | TYPE       | SNAPSHOTS |
+-------------+---------+-------------------+------+------------+-----------+
| db1-dev     | RUNNING | 10.1.2.252 (eth0) |      | PERSISTENT | 0         |
+-------------+---------+-------------------+------+------------+-----------+
```
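Profiles can also be attached at launch time with `lxc launch`’s `-p` flag. For the remaining containers, that avoids the separate `lxc profile apply` step. Passing `-p` replaces the profile list, so `default` has to be named explicitly. This is a hedged sketch; the `launch_db` name and the `DRY_RUN` switch are hypothetical.

```bash
#!/usr/bin/env bash
# Launch containers with both the default and hugepages profiles (hypothetical helper).

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

launch_db() {
  local image="$1"; shift
  local name
  for name in "$@"; do
    # -p replaces the profile list, so "default" must be listed explicitly.
    run lxc launch "$image" "$name" -p default -p hugepages || return 1
  done
}
```

Usage: `launch_db ubuntu-16.04 db2-dev db3-dev`.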

Copy SSH key

I’ve skipped some prep steps here. Basically, you need to exec bash in the container (lxc exec db1-dev -- bash), run ssh-keygen -t rsa and make sure sshd is running. I have also configured a static IP for the container. I may come back and add these steps later.

Assuming you can already ssh to the container with a password, run the lxc file push command below to copy your public key into the container, so you can ssh without a password. Note that it overwrites any existing /root/.ssh/authorized_keys. Ideally, configuration management would handle all of this before this point.

lxc file push ~/.ssh/id_rsa.pub db1-dev/root/.ssh/authorized_keys
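If /root/.ssh does not exist yet in the container, the push will likely fail. Separately, sshd’s default StrictModes setting ignores keys when the file or its directory is writable by group or others. The sketch below prepares the directory first, using only `lxc exec` and `lxc file push`. The `push_ssh_key` name and the `DRY_RUN` switch are hypothetical.

```bash
#!/usr/bin/env bash
# Install a public key as root's authorized_keys in a container (hypothetical helper).

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

push_ssh_key() {
  local container="$1" key="${2:-$HOME/.ssh/id_rsa.pub}"
  # Create ~/.ssh with the permissions sshd expects before pushing the key.
  run lxc exec "$container" -- mkdir -p /root/.ssh || return 1
  run lxc exec "$container" -- chmod 700 /root/.ssh || return 1
  # This overwrites any existing authorized_keys file in the container.
  run lxc file push "$key" "${container}/root/.ssh/authorized_keys" || return 1
  run lxc exec "$container" -- chmod 600 /root/.ssh/authorized_keys
}
```

Usage: `push_ssh_key db1-dev`.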

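Install ScyllaDB apt sources.list

Inside the container, add the ScyllaDB 1.5 repository, refresh the package index so apt can see the new packages, and install:

wget -O /etc/apt/sources.list.d/scylla.list http://downloads.scylladb.com/deb/ubuntu/scylla-1.5-xenial.list

apt-get update

apt-get install scylla scylla-server scylla-tools uuid-runtime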

I think you can actually just install scylla, since it appears to be a meta-package that pulls in the others. I’m reconstructing this from my bash history, and apparently I ran apt-get install scylla after installing scylla-server and scylla-tools separately.

Edit /etc/default/scylla-server

Next, we need to update SCYLLA_ARGS to limit CPU and RAM usage within the container itself:

SCYLLA_ARGS="--log-to-syslog 1 --log-to-stdout 0 --default-log-level info --collectd-address=127.0.0.1:25826 --collectd=1 --collectd-poll-period 3000 --network-stack posix --smp 4 --memory 6G --overprovisioned"

I’m assuming this tells ScyllaDB to use 4 cores and only 6G of the 8G assigned to the container. I’m not totally sure what --overprovisioned does exactly, but a reply on the mailing list recommended it as something to try. As I am brand new to this and totally winging it, I’m rolling with it for now.
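--smp only makes sense relative to the CPUs pinned to the container, so a small consistency check can catch mismatches when either number changes later. The sketch below counts the CPUs in a `limits.cpu` value (comma-separated, with optional `a-b` ranges, both of which LXD accepts). It then compares that count with the `--smp` value in a SCYLLA_ARGS string. The function names are hypothetical, and the parser only recognises `--smp N` or `--smp=N`.

```bash
#!/usr/bin/env bash
# Count CPUs in a limits.cpu-style list such as "0,1,2,3" or "0-3,8" (hypothetical).
count_cpus() {
  local total=0 part lo hi
  local -a parts
  IFS=',' read -r -a parts <<< "$1"
  for part in "${parts[@]}"; do
    if [[ "$part" == *-* ]]; then
      lo="${part%-*}"; hi="${part#*-}"
      total=$(( total + hi - lo + 1 ))
    else
      total=$(( total + 1 ))
    fi
  done
  echo "$total"
}

# Extract N from "--smp N" (or "--smp=N") in a SCYLLA_ARGS string.
smp_from_args() {
  sed -n 's/.*--smp[ =]\([0-9][0-9]*\).*/\1/p' <<< "$1"
}

# Succeed only if --smp does not exceed the number of pinned CPUs.
check_smp() {
  local args="$1" cpus="$2" smp
  smp=$(smp_from_args "$args")
  [ -n "$smp" ] && [ "$smp" -le "$(count_cpus "$cpus")" ]
}
```

Run from the host, `check_smp "$SCYLLA_ARGS" "$(lxc config get db1-dev limits.cpu)"` succeeds when the two values agree.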

Edit /etc/scylla/scylla.yaml

I think the last step was to edit scylla.yaml and change the address settings from localhost/127.0.0.1 to the container’s IP, 10.1.2.252.

One callout: I left one option set to localhost. Some script, or the database itself, seems to talk to itself over localhost, and changing this option to the container’s IP didn’t work:

api_address: 127.0.0.1
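The post doesn’t list exactly which keys were changed. In a stock scylla.yaml, the usual candidates are `listen_address`, `rpc_address` and the `seeds` entry under `seed_provider` (names inherited from Cassandra), so treat that choice as an assumption. As far as I can tell, `api_address` is the REST API that scylla-jmx (and therefore nodetool) expects to find on localhost, which may explain why changing it broke things. The sketch below rewrites only those three keys with GNU sed and leaves `api_address` alone. The function name is hypothetical, and the sketch assumes the keys are uncommented, as in the lines it edits.

```bash
#!/usr/bin/env bash
# Point scylla.yaml's listen/RPC addresses and seed list at the container's IP
# (hypothetical helper; the choice of keys is an assumption).
set_scylla_addresses() {
  local yaml="$1" ip="$2" seeds="${3:-$2}"
  sed -i \
    -e "s/^listen_address:.*/listen_address: ${ip}/" \
    -e "s/^rpc_address:.*/rpc_address: ${ip}/" \
    -e "s/^\([[:space:]]*- seeds:\).*/\1 \"${seeds}\"/" \
    "$yaml"
  # api_address is intentionally left untouched (127.0.0.1).
}
```

Inside db1-dev, that would be `set_scylla_addresses /etc/scylla/scylla.yaml 10.1.2.252`, followed by `systemctl restart scylla-server`. The other nodes would pass db1-dev’s IP as the third argument so they share a seed.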

Conclusion

While proofreading this, I realized I’m missing quite a bit of information and should split this into multiple blog posts, for example separating out the LXD configuration, including how to use ZFS.