Kafka FAQs

What are Some Relevant Kafka Use Cases?

According to the Apache Kafka website, Kafka's original use case was to rebuild a user activity tracking pipeline as a set of real-time publish-subscribe feeds.

Common use cases include:

Operational Monitoring: Kafka stream processing is often used for aggregating statistics from distributed applications to produce centralized feeds of operational data.

Log Aggregation: Kafka is often used to collect physical log files from servers and put them in a central location for processing. By abstracting away the details of individual files, Kafka provides a cleaner view of log or event data as a stream of messages.

Stream Processing: Many Kafka users process data in pipelines consisting of multiple stages, in which raw input data is consumed from Kafka topics and then aggregated, enriched, or otherwise transformed into new topics for further consumption or follow-up processing.
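As a rough illustration of the multi-stage pattern, here is a minimal Python sketch that runs two stages in memory. It is not Kafka client code: the `Topic` class is a hypothetical stand-in for a Kafka topic, and the user-to-region lookup table is made-up data. In a real deployment each stage would consume from and produce to actual topics through a Kafka client or a library such as Kafka Streams.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical in-memory stand-in for a Kafka topic: an append-only list."""
    name: str
    records: list = field(default_factory=list)

    def append(self, record: dict) -> None:
        self.records.append(record)


# Hypothetical lookup table used to enrich raw click events.
USER_REGIONS = {"u1": "EU", "u2": "US"}


def enrich_stage(source: Topic, sink: Topic) -> None:
    """Stage 1: consume raw events, add a region field, write to a new topic."""
    for event in source.records:
        region = USER_REGIONS.get(event["user"], "unknown")
        sink.append(dict(event, region=region))


def aggregate_stage(source: Topic, sink: Topic) -> None:
    """Stage 2: count clicks per region and emit one summary record per region."""
    counts = {}
    for event in source.records:
        counts[event["region"]] = counts.get(event["region"], 0) + 1
    for region, count in sorted(counts.items()):
        sink.append({"region": region, "clicks": count})


raw = Topic("clicks-raw")
for user in ["u1", "u2", "u1", "u3"]:
    raw.append({"user": user, "page": "/home"})

enriched = Topic("clicks-enriched")
summary = Topic("clicks-by-region")
enrich_stage(raw, enriched)
aggregate_stage(enriched, summary)
print(summary.records)
```

Because each stage writes to its own topic, a later stage (or an unrelated consumer) can read `clicks-enriched` independently of the aggregation.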

Event Sourcing: Event sourcing is a style of application design in which state changes are logged as a time-ordered sequence of records. Kafka's support for very large volumes of stored log data makes it an excellent backend for an application built in this style.
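The core idea of event sourcing is that current state is not stored directly but derived by replaying the ordered log. The following minimal Python sketch shows that replay; the account events and the `apply_event`/`rebuild` helpers are hypothetical, and a real system would read the events from a Kafka topic rather than a list.

```python
from typing import List, Optional

# Hypothetical event log for one bank account, in the order the events were appended.
events = [
    {"type": "opened", "amount": 0},
    {"type": "deposited", "amount": 100},
    {"type": "withdrew", "amount": 30},
    {"type": "deposited", "amount": 50},
]


def apply_event(balance: int, event: dict) -> int:
    """Return the balance that results from applying one event to the previous balance."""
    if event["type"] == "deposited":
        return balance + event["amount"]
    if event["type"] == "withdrew":
        return balance - event["amount"]
    return balance  # "opened" (and any unknown type) leaves the balance unchanged


def rebuild(log: List[dict], upto: Optional[int] = None) -> int:
    """Replay the log from the beginning; `upto` limits the replay to the first N events."""
    balance = 0
    for event in log[:upto]:
        balance = apply_event(balance, event)
    return balance


current = rebuild(events)          # 120: state after every event
as_of_second = rebuild(events, 2)  # 100: state after "opened" and the first deposit
print(current, as_of_second)
```

Replaying only a prefix of the log, as `rebuild(events, 2)` does, is what makes it possible to reconstruct historical state or rebuild a derived view after fixing a bug.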

Commit Log: Kafka can serve as a kind of external commit-log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism for failed nodes to restore their data.
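To make the re-syncing idea concrete, the sketch below models a recovering node that asks for every log entry after the last one it holds. The `Node` class and `resync` function are hypothetical, and the sketch assumes the follower's log is an exact prefix of the leader's; real Kafka replication must also detect and truncate divergent entries, which is omitted here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A hypothetical node holding a local copy of an append-only log."""
    name: str
    log: List[str] = field(default_factory=list)

    def next_offset(self) -> int:
        # Offsets are positions in the log, so the next offset is the current length.
        return len(self.log)


def resync(follower: Node, leader: Node) -> int:
    """Copy every entry the follower is missing, starting at its next offset.

    Returns the number of entries copied.
    """
    start = follower.next_offset()
    missing = leader.log[start:]
    follower.log.extend(missing)
    return len(missing)


leader = Node("leader", ["set x=1", "set y=2", "set x=3", "del y"])
follower = Node("follower", ["set x=1", "set y=2"])  # failed after two entries

copied = resync(follower, leader)
print(copied, follower.log == leader.log)
```

Because the log is ordered and append-only, the recovering node only needs a single number, its next offset, to know exactly what it missed.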

How does Kafka Compare with Message Queuing Solutions?

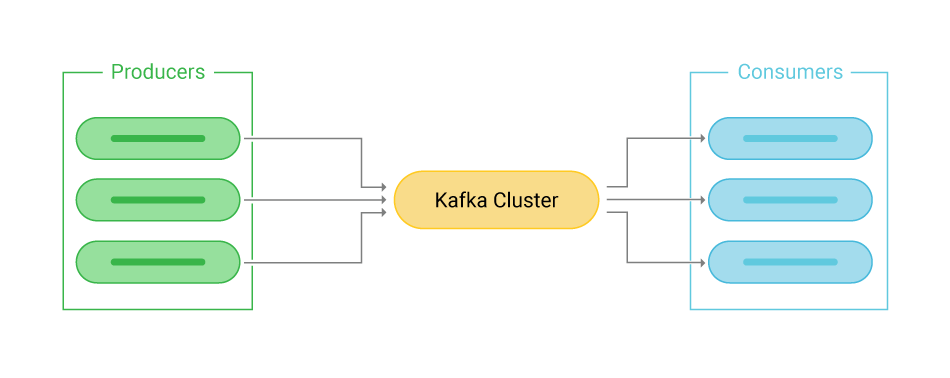

Kafka was created as an alternative to traditional publish-subscribe (pub-sub) message queuing solutions such as IBM MQ, Azure Service Bus, and ActiveMQ. The pub-sub pattern enables IT systems to communicate in an asynchronous, decoupled manner. Traditional message queue systems typically rely on push-based delivery, in which the broker pushes messages from a queue out to consumers. In contrast, Apache Kafka uses pull-based communication: consumers request (pull) messages from the Kafka brokers, each at its own pace and from its own position in the log. Combined with Kafka's partitioned, append-only log, this design lets consumers read in large batches without the broker tracking the delivery of each individual message, which helps Kafka achieve higher throughput and scale further than many traditional message queue systems.
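The difference between push and pull delivery is easiest to see in code. This minimal Python sketch (hypothetical `Broker` and `Consumer` classes, not the Kafka client API) models consumers that each track their own offset and decide how many records to fetch per request, loosely mirroring a Kafka consumer's poll loop.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Broker:
    """Hypothetical broker that stores one topic as an append-only log."""
    log: List[str] = field(default_factory=list)

    def fetch(self, offset: int, max_records: int) -> List[str]:
        # The broker does not push anything; it only serves the slice that was requested.
        return self.log[offset:offset + max_records]


@dataclass
class Consumer:
    """Hypothetical consumer that tracks its own position and pulls in batches."""
    name: str
    batch_size: int
    offset: int = 0
    seen: List[str] = field(default_factory=list)

    def poll(self, broker: Broker) -> List[str]:
        batch = broker.fetch(self.offset, self.batch_size)
        self.offset += len(batch)  # advance only past what was actually received
        self.seen.extend(batch)
        return batch


broker = Broker([f"msg-{i}" for i in range(5)])
fast = Consumer("fast", batch_size=5)
slow = Consumer("slow", batch_size=2)

fast.poll(broker)  # reads all five messages in one request
slow.poll(broker)  # reads only msg-0 and msg-1; the rest wait in the log
print(fast.offset, slow.offset)
```

The slow consumer is never overwhelmed: unread messages simply stay in the broker's log until it asks for them, which is the backpressure property that pull-based delivery provides.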

AWS Kinesis vs. Kafka

Operating Kafka on-premises entails a range of activities, including managing ZooKeeper (in Kafka versions that still depend on it), sizing and scaling the cluster, capacity planning, and applying security patches, to name only a few responsibilities. Many IT organizations seek to eliminate this operational overhead by adopting cloud-based event streaming platforms.

A number of cloud-based Kafka services are available for organizations that do not want to run Kafka on-premises. One is Amazon Managed Streaming for Apache Kafka (Amazon MSK), offered by Amazon Web Services (AWS). Kafka is also available as a managed service on all major cloud platforms through Confluent Cloud and other providers.

An alternative event streaming platform is Kinesis, a ‘serverless’ data streaming technology provided by AWS. As with other serverless technologies, you don’t have to manage the hosting software or the underlying resources.

Apache Pulsar vs. Kafka

Apache Kafka and Apache Pulsar are competing technologies. Kafka was originally developed at LinkedIn; Pulsar was developed at Yahoo. Kafka brokers store each topic partition as a log on their own local disks, whereas Pulsar separates its brokers from a storage layer built on Apache BookKeeper.

Notably, Pulsar offers a Kafka-compatible API that enables developers to migrate from Kafka to Pulsar. Larger projects should consider that Pulsar is primarily community-led and has a smaller commercial support ecosystem than Kafka.

How to Use Kafka Connect and Its Common Uses

Kafka Connect is an open source Apache Kafka component that provides a reliable and scalable mechanism for moving data between Kafka and other data systems. Connectors are classified by the direction in which the data moves: a source connector reads data from an external data source and writes it to Kafka topics, while a sink connector reads data from Kafka topics and writes it to an external datastore.
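The source and sink directions can be sketched as two functions around a topic. This is a conceptual Python illustration under stated assumptions: the in-memory table, topic, and store are hypothetical, and real connectors are Java classes built on Kafka Connect's `SourceConnector`/`SourceTask` and `SinkConnector`/`SinkTask` that are deployed and configured rather than called directly.

```python
from typing import Dict, List

# Hypothetical in-memory "external systems" and topic; no real Kafka Connect API is used.
source_table: List[Dict] = [{"id": 1, "name": "alice"}, {"id": 2, "name": "bob"}]
topic: List[Dict] = []
sink_store: Dict[int, Dict] = {}


def run_source_connector(rows: List[Dict], out_topic: List[Dict]) -> None:
    """Source direction: read from the external system, write records to the topic."""
    for row in rows:
        out_topic.append({"key": row["id"], "value": row})


def run_sink_connector(in_topic: List[Dict], store: Dict[int, Dict]) -> None:
    """Sink direction: read records from the topic, upsert them by key into the external system."""
    for record in in_topic:
        store[record["key"]] = record["value"]


run_source_connector(source_table, topic)
run_sink_connector(topic, sink_store)
print(sorted(sink_store))
```

Keying records by the source row's `id` lets the sink upsert, so replaying the topic leaves the target store in the same state.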

Community-developed Kafka connector plugins are available for PostgreSQL, MySQL, Cassandra, MongoDB, Redis, JDBC, FTP, MQTT, Couchbase, REST API, S3, and Elasticsearch. These plugins provide a standardized implementation for moving data between Kafka and those datastores.

Many Kafka connectors are available from Confluent through Confluent Hub.

Does ScyllaDB Offer Solutions for Kafka?

ScyllaDB’s high-performance NoSQL database is a natural fit for Apache Kafka. Both ScyllaDB and Kafka support the massive scalability and high throughput required in modern event streaming data architectures.

Because ScyllaDB is an API-compatible implementation of Apache Cassandra, users who already connect Kafka to Cassandra can swap in ScyllaDB transparently, with no code changes required.

ScyllaDB’s Kafka connector optimizes performance through shard-awareness. Kafka users who adopt ScyllaDB have flexible deployment options, including open source, enterprise-grade or cloud-hosted fully managed solutions for both technologies. Common, fully supported combinations include:

- ScyllaDB Open Source NoSQL database and open source Apache Kafka

- ScyllaDB Enterprise and the Confluent Platform

- ScyllaDB Cloud (NoSQL DBaaS) and Confluent Cloud managed cloud services

The Kafka ScyllaDB Connector provides the flexibility and performance needed to build the next generation of Big Data applications.
