Database Benchmark FAQs

Database Performance Benchmarks

Database performance depends on the characteristics of the operations that make up a workload, and transaction processing adds further complexity. Create, Read, Update, and Delete (CRUD) operations each place different demands on the system, which significantly affects latency and throughput benchmark results.

A workload is a sequence of operations, ordered either by sequence or by time, that defines the access patterns and the mix of operations applied to a database. Workloads are based on either trace-based (real-world) data or synthetic data. Synthetic workloads are generated from load patterns and constraints derived from real-world usage.
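To make the idea of a synthetic workload concrete, here is a minimal Python sketch that generates a reproducible sequence of CRUD operations. The operation mix, the key names, and the Zipf-like key skew are illustrative assumptions, not parameters from any particular benchmark tool; in practice you would derive them from real-world load patterns.

```python
import random
from collections import Counter

# Hypothetical operation mix: these fractions are illustrative, not from a real trace.
OP_MIX = {"read": 0.50, "update": 0.40, "create": 0.05, "delete": 0.05}


def synthetic_workload(n_ops, n_keys, op_mix=OP_MIX, skew=1.0, seed=42):
    """Generate a synthetic workload as a list of (operation, key) tuples.

    Keys follow a Zipf-like distribution (weight 1 / rank**skew), so a few
    "hot" keys receive most of the traffic; skew=0 gives uniform access.
    """
    rng = random.Random(seed)  # fixed seed makes every run replay the same workload
    ops = list(op_mix)
    op_weights = [op_mix[op] for op in ops]
    keys = [f"key{i}" for i in range(n_keys)]
    key_weights = [1.0 / (rank ** skew) for rank in range(1, n_keys + 1)]

    chosen_ops = rng.choices(ops, weights=op_weights, k=n_ops)
    chosen_keys = rng.choices(keys, weights=key_weights, k=n_ops)
    return list(zip(chosen_ops, chosen_keys))


if __name__ == "__main__":
    workload = synthetic_workload(n_ops=10_000, n_keys=1_000)
    print(Counter(op for op, _ in workload))
    print(Counter(key for _, key in workload).most_common(3))
```

Raising `skew` concentrates traffic on fewer hot keys, and the fixed seed means repeated benchmark runs see exactly the same sequence of operations.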

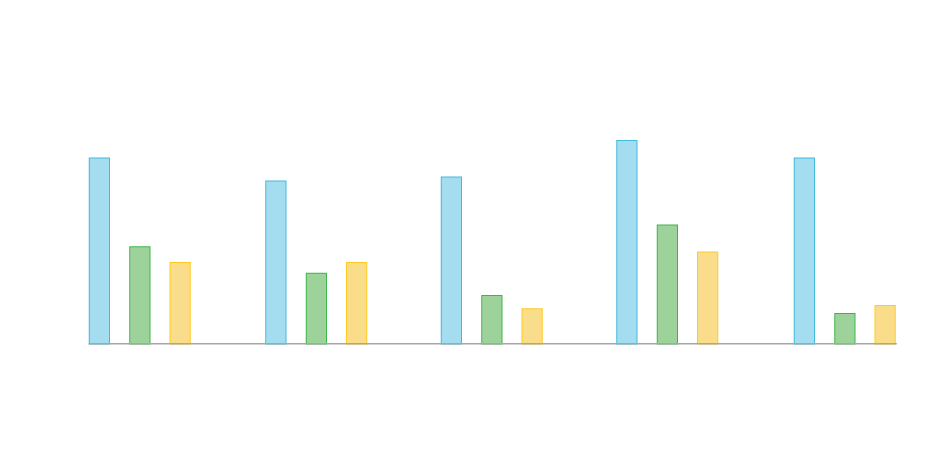

[See NoSQL and distributed SQL benchmarks]

Database Benchmark Comparisons

There are two primary types of benchmarks: synthetic benchmarks and real-world benchmarks.

Synthetic benchmarks typically simulate a generic workload, such as an OLTP workload, or implement a standard benchmark such as TPC-C (OLTP) or TPC-H (decision support). They attempt to follow real-world access patterns in order to determine how a given database configuration and hardware perform together under a particular type of workload. Because they do not depend on your application's schema design, synthetic benchmarks can run anywhere. However, they cannot fully replace real-world conditions.

Real-world, or trace-based, benchmarks use a dataset and queries drawn from the actual application. They do not necessarily require the full dataset or the complete query mix. The goal is to see and understand the precise interactions among the application, the database configuration, and the hardware, either for one particular aspect or in general.
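One common way to run a trace-based benchmark is to replay recorded operations with their original timing. The sketch below assumes a trace of `(timestamp_s, operation, key)` tuples sorted by timestamp, plus a hypothetical `execute` callback that sends one operation to the database under test; neither format comes from a specific tool.

```python
import time


def replay_trace(trace, execute, speedup=1.0):
    """Replay a recorded trace of (timestamp_s, operation, key) records.

    Each operation is issued at its original offset from the first record,
    divided by `speedup` (2.0 replays twice as fast). `execute(op, key)` is a
    hypothetical callback that sends one operation to the database.
    Returns the latency of each `execute` call in seconds.
    """
    if not trace:
        return []
    t0_trace = trace[0][0]          # timestamps are assumed sorted ascending
    t0_wall = time.perf_counter()
    latencies = []
    for ts, op, key in trace:
        target = t0_wall + (ts - t0_trace) / speedup
        delay = target - time.perf_counter()
        if delay > 0:
            time.sleep(delay)       # wait until this record's scheduled time
        start = time.perf_counter()
        execute(op, key)
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    sample = [(100.00, "read", "k1"), (100.01, "update", "k2"), (100.02, "read", "k1")]
    print(replay_trace(sample, lambda op, key: None, speedup=10.0))
```

This sketch issues operations one at a time, so if the database falls behind, later operations start late. A real replay tool would typically issue requests concurrently so that one slow response does not delay the rest of the trace.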

Depending on your requirements and use case, you might want to find and compare:

- NoSQL database benchmarks

- Relational database benchmarks

- In-memory database benchmarks

- Graph database benchmarks

- Big data database benchmarks

Reasons for Database Benchmarking

There are multiple reasons for database benchmarking:

Selecting the right database. There are hundreds of industry-ready databases, many of them specialized for specific use cases, and for each use case there are typically several suitable options. Database benchmarking provides comparable metrics for each candidate, serving as the foundation for quantitative, objective decisions about the best option for your cloud infrastructure, workload, and other parameters.

Selecting cloud resources. The cloud provider and resources a database management system runs on can affect all of its non-functional properties, such as availability, performance, and scalability, and database benchmark results can vary significantly between providers. A database or workload may excel with one provider but struggle with another. While exact prediction is impossible, database benchmarking can identify correlations and good matches. It offers the basis for an evidence-based decision about which cloud provider best suits your workload in terms of internal storage, VM type, price-performance ratio, and other factors.

Monitoring for changes in performance. Benchmarking routinely, and in particular before and after version updates, detects performance differences and allows teams to address them proactively.
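A before-and-after comparison can be automated along these lines. This is a minimal sketch, assuming each benchmark run has already been reduced to a summary dictionary; the metric names, the `HIGHER_IS_BETTER` table, and the 5% tolerance are hypothetical choices for illustration.

```python
# Whether a higher value is better for each metric (hypothetical metric names).
HIGHER_IS_BETTER = {"throughput_ops_s": True, "p99_latency_ms": False}


def find_regressions(before, after, tolerance=0.05):
    """Compare benchmark summaries from before and after a version update.

    Returns {metric: relative_change} for every metric that got worse by more
    than `tolerance` (0.05 == 5%). A positive change means the value increased.
    """
    regressions = {}
    for metric, higher_is_better in HIGHER_IS_BETTER.items():
        old, new = before[metric], after[metric]
        change = (new - old) / old
        # Express the change so that a positive number always means "worse".
        worse_by = -change if higher_is_better else change
        if worse_by > tolerance:
            regressions[metric] = change
    return regressions


if __name__ == "__main__":
    # Illustrative summaries, not real measurements.
    before = {"throughput_ops_s": 100_000, "p99_latency_ms": 4.0}
    after = {"throughput_ops_s": 98_000, "p99_latency_ms": 5.0}
    print(find_regressions(before, after))  # {'p99_latency_ms': 0.25}
```

Here the 2% throughput dip stays within tolerance, while the 25% rise in P99 latency is flagged for investigation.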

Capacity planning. As workload requirements fluctuate, the capabilities and limits of a system are often unknown. Stress testing identifies the limits of the current setup, and capacity planning based on those limits improves safety margins and shortens reaction times.
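A simple stress-testing approach is to raise the offered load step by step until a latency target is violated. In the sketch below, `simulated_p99_ms` is a hypothetical toy model standing in for a real benchmark run, and the capacity, step size, and SLO values are arbitrary assumptions.

```python
def simulated_p99_ms(load_ops_s, capacity_ops_s=50_000, base_ms=1.0):
    """Hypothetical toy model standing in for a real benchmark run: P99 latency
    grows sharply as the offered load approaches the system's capacity."""
    utilization = load_ops_s / capacity_ops_s
    if utilization >= 1.0:
        return float("inf")  # saturated: requests queue up without bound
    return base_ms / (1.0 - utilization)


def find_max_load(measure_p99_ms, slo_ms, start=5_000, step=5_000, limit=200_000):
    """Raise the offered load step by step and return the highest tested load
    whose P99 latency meets the SLO, or None if even `start` violates it."""
    best = None
    load = start
    while load <= limit:
        if measure_p99_ms(load) > slo_ms:
            break  # first step that violates the SLO: stop the stress test
        best = load
        load += step
    return best


if __name__ == "__main__":
    print(find_max_load(simulated_p99_ms, slo_ms=4.5))  # 35000
```

With these toy numbers, 35,000 ops/s is the highest tested load that meets a 4.5 ms P99 target; planning to run comfortably below that figure provides the safety margin that capacity planning aims for.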

Tuning the system. Regularly tuning database setups to work around bottlenecks, fix existing problems, or mitigate anticipated ones is error-prone, time-consuming, and frustrating, but far less so when database benchmarking has already identified the optimal settings.

How to Benchmark Database Performance: Database Benchmarking Techniques

Some important steps in the database benchmarking process include:

Select specific benchmarks. There are a number of standard benchmarks you can use. Consider what you want to accomplish, then choose or design benchmarks that meet those goals, whether that means tuning queries, assessing stack scalability, determining hardware settings, tweaking the configuration, or something else.

Make one change at a time. Any single change to the hardware, operating system, configuration, or query mix can change the latency metrics. If you alter more than one element at once, there is no way to tell which one caused the change in the benchmark results.
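One way to enforce this discipline is to record each run's settings and check that exactly one of them differs from the baseline before comparing results. The setting names and values below are hypothetical placeholders; this is a sketch, not part of any benchmarking tool.

```python
def changed_settings(baseline, candidate):
    """Return the sorted names of settings whose values differ between runs.
    A setting missing from one run is treated as having the value None."""
    names = baseline.keys() | candidate.keys()
    return sorted(name for name in names if baseline.get(name) != candidate.get(name))


def require_single_change(baseline, candidate):
    """Return the one changed setting, or raise ValueError if zero or several changed."""
    changed = changed_settings(baseline, candidate)
    if len(changed) != 1:
        raise ValueError(f"expected exactly one changed setting, found {changed}")
    return changed[0]


if __name__ == "__main__":
    # Hypothetical settings captured alongside each benchmark run.
    baseline = {"instance_type": "type-a", "os_kernel": "6.1",
                "cache_size_gb": 16, "query_mix": "read-heavy"}
    candidate = dict(baseline, cache_size_gb=32)
    print(require_single_change(baseline, candidate))  # cache_size_gb
```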

Make multiple benchmark runs. Repeated runs are the only way to gradually untangle the complex effects you observe over time. They also help account for unknown factors that can temporarily affect performance and skew results. An average across many runs is far more accurate than any single measurement.
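An average is more trustworthy when you also measure how much the runs disagree. This minimal sketch computes the mean, the sample standard deviation, and the coefficient of variation (CV) of throughput across runs; the 5% CV threshold for calling the results stable is an arbitrary assumption, not an industry standard.

```python
import statistics


def summarize_runs(throughputs, max_cv=0.05):
    """Summarize throughput (ops/s) from repeated runs of the same benchmark.

    Returns the mean, sample standard deviation, coefficient of variation
    (stdev / mean), and whether the runs look stable (CV <= max_cv).
    """
    if len(throughputs) < 2:
        raise ValueError("need at least two runs to estimate variability")
    mean = statistics.mean(throughputs)
    stdev = statistics.stdev(throughputs)
    cv = stdev / mean
    return {"mean": mean, "stdev": stdev, "cv": cv, "stable": cv <= max_cv}


if __name__ == "__main__":
    # Illustrative throughput results (ops/s) from five identical runs.
    print(summarize_runs([100_000, 102_000, 98_000, 101_000, 99_000]))
```

If the CV is high, adding runs or tracking down the source of the noise is usually more useful than reporting the average as-is.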

Understand database benchmarks. Throughput, measured in queries per second (QPS), is not the most important metric, because it ignores latency. A low P99 latency means that 99% of queries complete within that time, so nearly all queries return quickly and in a stable, predictable way, which is what users expect. Understanding the metrics also helps ensure you use the right database benchmarking tools and do not misuse existing ones.
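The following sketch shows why throughput alone can mislead. It assumes each run is recorded as a non-empty list of per-query latencies in milliseconds plus the run's duration; the two runs are invented for illustration, not measured results. Both deliver the same QPS, and the second even has a slightly lower mean latency, yet its P99 is 25 times worse.

```python
import math


def p99(latencies_ms):
    """Nearest-rank 99th percentile: the smallest sample such that at least
    99% of all samples are less than or equal to it."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(99 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(latencies_ms, duration_s):
    """Throughput (QPS), mean latency, and P99 latency for one benchmark run."""
    return {
        "qps": len(latencies_ms) / duration_s,
        "mean_ms": sum(latencies_ms) / len(latencies_ms),
        "p99_ms": p99(latencies_ms),
    }


if __name__ == "__main__":
    # Illustrative runs of 1,000 queries in 1 second each (not real measurements).
    steady = [2] * 1000               # every query takes 2 ms
    spiky = [1] * 980 + [50] * 20     # mostly 1 ms, but 2% of queries take 50 ms
    print("steady:", summarize(steady, 1.0))
    print("spiky: ", summarize(spiky, 1.0))
```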

[More database benchmarking best practices]

Does ScyllaDB Provide Database Benchmarks?

Yes, the ScyllaDB Engineering team performs extensive benchmarking of its database.

Apache Cassandra Benchmarks

ScyllaDB’s Apache Cassandra benchmarks compare Cassandra 3.11, Cassandra 4.0, and ScyllaDB, and show ScyllaDB outperforming Cassandra in almost every area:

- Cassandra 4.0 has better P99 latency than Cassandra 3.11

- ScyllaDB has 2x-5x better throughput and much better latencies than Cassandra 4.0

- ScyllaDB adds and replaces nodes far faster than Cassandra 4.0

- ScyllaDB finishes compaction 32x faster than Cassandra 4.0

DynamoDB Benchmarks

ScyllaDB Cloud outperforms Amazon DynamoDB and is significantly less expensive for similar workloads:

- 20x better throughput in the hot-partition test

- ScyllaDB Cloud costs 1/7 as much as DynamoDB for equivalent workloads

- Average replication latency: 82 ms for ScyllaDB Cloud versus 370 ms for DynamoDB

- ScyllaDB provides you freedom of choice with no cloud vendor lock-in

Bigtable and CockroachDB Benchmarks

ScyllaDB’s NoSQL database benchmarks also show it outperforming Google Cloud Bigtable and CockroachDB:

- ScyllaDB Cloud performs 26x better than Google Cloud Bigtable with a real-world, unoptimized data distribution

- Google Cloud Bigtable requires 10x as many nodes to handle the same workload as ScyllaDB Cloud

- ScyllaDB Cloud sustained 26x the throughput of Cloud Bigtable, with read latencies 1/800 and write latencies less than 1/100 of Cloud Bigtable’s

- Loading 10x the data into ScyllaDB took less than half the time CockroachDB needed to load its much smaller dataset.

- ScyllaDB handled 10x the amount of data.

- ScyllaDB achieved 9.3x the throughput of CockroachDB at 1/4 the latency.

Petabyte-Scale Benchmark

In a recent petabyte-scale benchmark, ScyllaDB achieved single-digit-millisecond P99 latency at 7M TPS, and that benchmark did not use the latest performance optimizations or the powerful new EC2 instances.

More ScyllaDB Benchmarks

Learn more about ScyllaDB’s NoSQL database benchmarks.