In mid-2015, Intel and Micron jointly unveiled a new kind of non-volatile memory named 3D XPoint (pronounced “cross-point”), which they claimed is up to 1000x faster than NAND. Now that 3D XPoint is generally available, we can start testing it. 3D XPoint uses electrical resistance to store bits and is considered to be bit addressable. Endurance is also much better than with NAND: the stated rating is 30 full drive writes per day for 5 years. 3D XPoint developers indicate that it is based on changes in resistance of the bulk material. Intel has stated that 3D XPoint does not use phase-change or memristor technology, although independent reviewers dispute this. To understand how XPoint reads and writes bits, you will need to dive into phase-change materials (PCM).
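To put a drive-writes-per-day (DWPD) rating into absolute terms, multiply capacity by DWPD and by the number of days in the rating period. The sketch below assumes the 375 GB capacity of the Optane SSD DC P4800X and decimal units (1 PB = 1,000,000 GB); the function name is ours, for illustration only.

```python
# Endurance arithmetic for a drive rated in drive writes per day (DWPD).
# Assumptions: 375 GB capacity (Optane SSD DC P4800X), 365-day years,
# decimal units (1 PB = 1,000,000 GB).

def total_bytes_written_gb(capacity_gb: float, dwpd: float, years: float) -> float:
    """Total data (GB) that may be written over the rated period."""
    days = years * 365
    return capacity_gb * dwpd * days


tbw_gb = total_bytes_written_gb(capacity_gb=375, dwpd=30, years=5)
print(f"{tbw_gb:,.0f} GB = {tbw_gb / 1_000_000:.1f} PB")  # prints "20,531,250 GB = 20.5 PB"
```

In other words, 30 full drive writes per day for 5 years works out to roughly 20.5 PB written.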

Source: Pcper.com

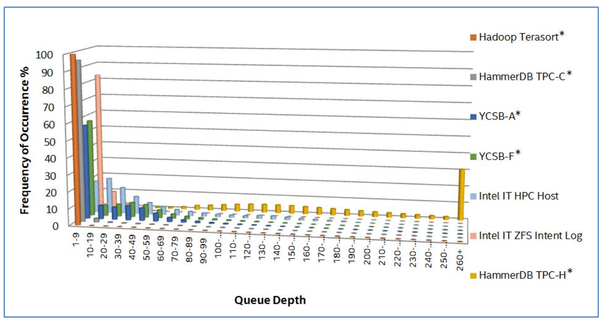

Today’s SSDs have reached such a high level of performance that they can serve requests extremely quickly, which lowers the overall queue depth for the majority of use cases. The graph above, provided by Intel, illustrates Intel’s own efforts to characterize the real-world load on an SSD for different applications. According to Intel’s workload examinations, most workloads rarely stray into high queue depth territory. Even intense workloads spike into high queue depths for only short periods and then quickly fall back into lower ranges. These spikes occur when outstanding I/O stacks up behind the storage device; a faster device that answered requests sooner would eliminate most of the short spikes on the chart. Because most workloads don’t reach high queue depths, the high IOps figures that SSD vendors typically publish from high-queue-depth benchmarks do not reflect most real-life application workloads. Intel® Optane™, by contrast, delivers its performance at low queue depths.
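The link between device speed and queue depth can be made concrete with Little’s law, which says the average number of outstanding requests equals the arrival rate multiplied by the average time each request spends in the system. The sketch below is our own illustration, not Intel’s model: it assumes a steady-state, open-loop workload whose request rate (a hypothetical 50,000 IOps) does not change when the device gets faster.

```python
# Little's law: average queue depth = arrival rate (IOps) x average latency (s).
# Assumption: steady state, with an arrival rate independent of device speed.

def average_queue_depth(iops: float, latency_s: float) -> float:
    """Average number of I/Os outstanding at the device."""
    return iops * latency_s


arrival_rate = 50_000  # hypothetical workload, requests per second
for latency_us in (100, 10):
    qd = average_queue_depth(arrival_rate, latency_us / 1_000_000)
    print(f"latency {latency_us} us -> average queue depth {qd:.1f}")
```

Cutting latency tenfold cuts the average queue depth tenfold for the same request rate, which is why a faster device keeps most workloads in low queue depth territory.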

A few months back, Intel unveiled the Optane SSD DC P4800X, a 3D XPoint-based drive for PCI Express (NVMe) slots in servers. It has a capacity of 375 GB, extremely low latency (typically under 10µs), and 2GB/s of throughput, which means it can serve either as a memory cache or as persistent storage. Intel® Optane™ drives offer unrivaled performance at low queue depths, which keeps CPUs busy and fully utilized. Intel® Optane™ SSDs for the data center let users do more work with the same server, resulting in lower TCO and more room to expand. Because the drive is extremely responsive under any load, it is highly predictable and consistently delivers ultra-fast service.

Optane: Impressive Latency and Throughput Results

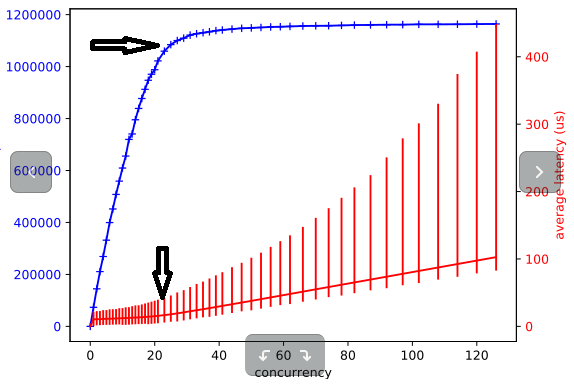

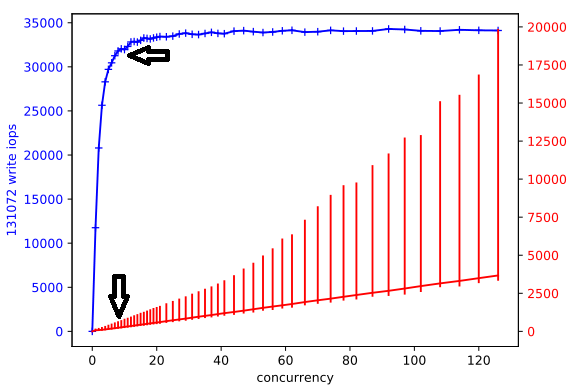

Our initial performance tests with Optane used Diskplorer to measure the drive’s capabilities. Diskplorer is a small wrapper around fio that graphs the relationship between concurrency (I/O depth), throughput, and IOps. Its goal is to find the “knee” and the maximum effective parallelism. Concurrency is the number of parallel operations that a disk or array can sustain. As concurrency increases, latency rises, and beyond an optimal point each further increase yields diminishing IOps gains (a “knee” in the blue line on the graphs).
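One simple way to locate a knee from (concurrency, IOps) measurements is to walk the points in order and stop at the first step whose relative IOps gain falls below a threshold. This is a hypothetical heuristic for illustration, not Diskplorer’s actual algorithm; the 5% threshold and the sample data are made up.

```python
from typing import Sequence, Tuple


def find_knee(points: Sequence[Tuple[int, float]], min_gain: float = 0.05) -> int:
    """Return the concurrency beyond which extra parallelism stops paying off.

    points:   (concurrency, iops) measurements, in any order.
    min_gain: smallest relative IOps improvement between consecutive
              points that still counts as worthwhile (hypothetical 5%).
    """
    pts = sorted(points)
    if not pts:
        raise ValueError("need at least one measurement")
    for (c0, iops0), (_, iops1) in zip(pts, pts[1:]):
        if iops0 > 0 and (iops1 - iops0) / iops0 < min_gain:
            return c0
    return pts[-1][0]  # no knee within the measured range


# Made-up measurements shaped like a typical saturation curve.
samples = [(1, 60_000), (2, 120_000), (4, 230_000), (8, 450_000),
           (16, 800_000), (24, 1_000_000), (32, 1_020_000), (64, 1_030_000)]
print(find_knee(samples))
```

A real tool would also weigh the latency curve, since latency keeps climbing past the knee while IOps barely move.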

For this purpose we used the following server:

2 x 14-core CPUs, 128GB DRAM, 2 x Intel® Optane™ SSD DC P4800X

CPU: Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

Figure 1: fio RandRead test with a 4K buffer provided the following results:

Optimal concurrency is about 24

Throughput: 1.0M IOps of 4k = 4GB/s

Latency: 18µs
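A result like the RandRead 4K one comes from running fio repeatedly at increasing I/O depth and recording IOps at each step. The sketch below builds such a sweep; the device path `/dev/nvme0n1`, the `libaio` engine, the 30-second runtime, and the single-job layout are our assumptions, not Diskplorer’s documented settings. It only issues random reads, but it still needs fio installed and read access to the device.

```python
import json
import subprocess
from typing import List, Tuple


def fio_randread_cmd(device: str, iodepth: int, runtime_s: int = 30) -> List[str]:
    """Build an fio command line for one 4K random-read point of the sweep."""
    return [
        "fio",
        f"--name=randread-qd{iodepth}",
        f"--filename={device}",
        "--rw=randread",
        "--bs=4k",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",
        "--direct=1",  # bypass the page cache so the drive itself is measured
        f"--runtime={runtime_s}",
        "--time_based",
        "--output-format=json",
    ]


def read_iops(fio_json: str) -> float:
    """Extract read IOps of the first job from fio's JSON output."""
    return json.loads(fio_json)["jobs"][0]["read"]["iops"]


def sweep(device: str, depths: List[int]) -> List[Tuple[int, float]]:
    """Run fio once per I/O depth and collect (depth, IOps) pairs."""
    results = []
    for qd in depths:
        out = subprocess.run(fio_randread_cmd(device, qd),
                             capture_output=True, text=True, check=True).stdout
        results.append((qd, read_iops(out)))
    return results


# Show one command without running it (hypothetical device path).
print(" ".join(fio_randread_cmd("/dev/nvme0n1", 24)))
```

Calling `sweep("/dev/nvme0n1", [1, 2, 4, 8, 16, 24, 32, 64])` on a test machine would produce (depth, IOps) pairs suitable for plotting or for a knee search.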

Figure 2: fio RandWrite test with a 128KB buffer provided the following results:

Optimal concurrency is about 8

Throughput: 32K IOps

Latency: 237µs
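The two result sets can be cross-checked with simple arithmetic: throughput is IOps multiplied by block size, and Little’s law gives an expected average latency of concurrency divided by IOps. The sketch below uses only the numbers reported above and assumes “4K” and “128KB” mean 4,096 and 131,072 bytes.

```python
KIB = 1024  # assumption: block sizes are binary kilobytes


def bandwidth_gb_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal GB/s implied by an IOps figure and block size."""
    return iops * block_bytes / 1e9


def littles_law_latency_us(concurrency: int, iops: float) -> float:
    """Average latency implied by Little's law: latency = concurrency / IOps."""
    return concurrency / iops * 1e6


results = {
    "RandRead 4K": dict(concurrency=24, iops=1.0e6, block=4 * KIB, measured_us=18),
    "RandWrite 128K": dict(concurrency=8, iops=32e3, block=128 * KIB, measured_us=237),
}
for name, r in results.items():
    bw = bandwidth_gb_s(r["iops"], r["block"])
    lat = littles_law_latency_us(r["concurrency"], r["iops"])
    print(f"{name}: {bw:.1f} GB/s, Little's law latency {lat:.0f} us "
          f"(measured {r['measured_us']} us)")
```

Both tests imply roughly 4 GB/s across the two drives, and the Little’s law estimates (about 24µs and 250µs) land in the same range as the measured latencies; exact agreement isn’t expected because the optimal concurrency is only approximate.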

Comparison with “Traditional” SSD Diskplorer Results

To put the Optane results in context, we added the results of a similar Diskplorer test that we ran on 8 NVMe-based drives on Google Compute Engine (GCE). The image presents results for a fio RandRead test with a 4K buffer. While the GCE results look impressive, they are not close to the Optane drive in terms of throughput and latency.

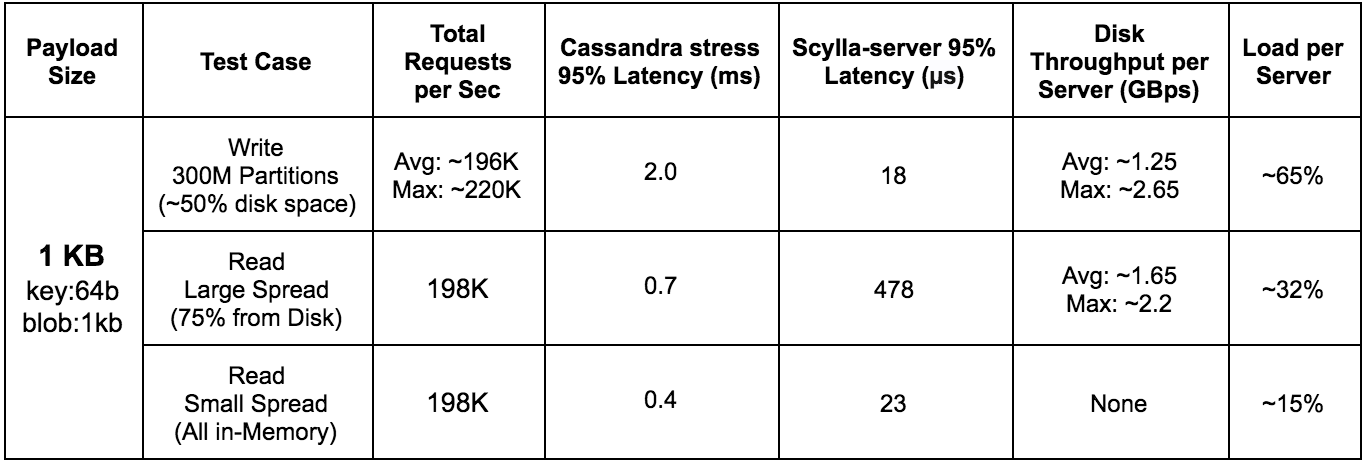

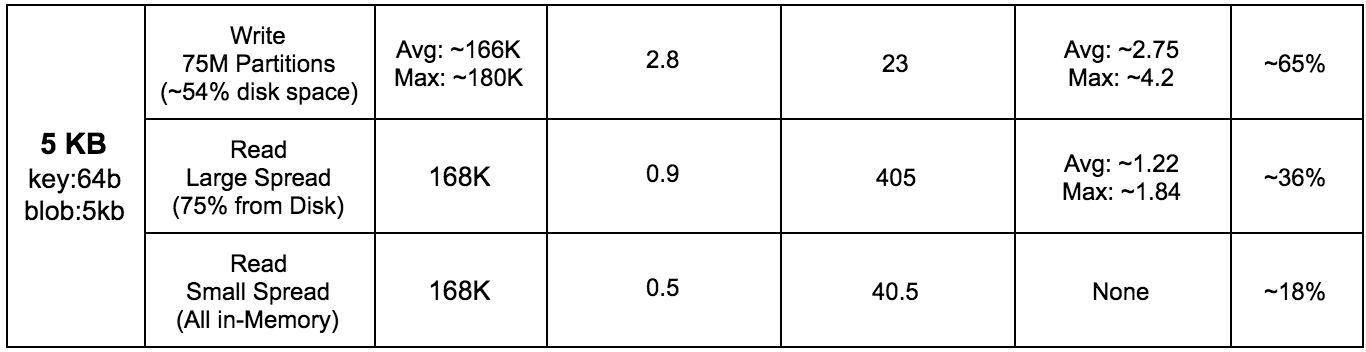

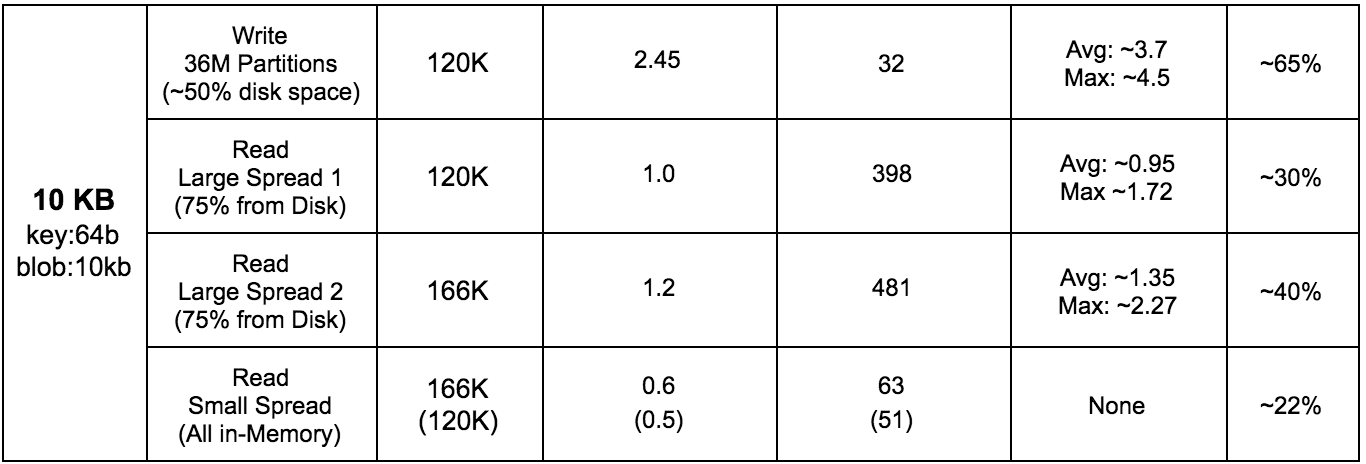

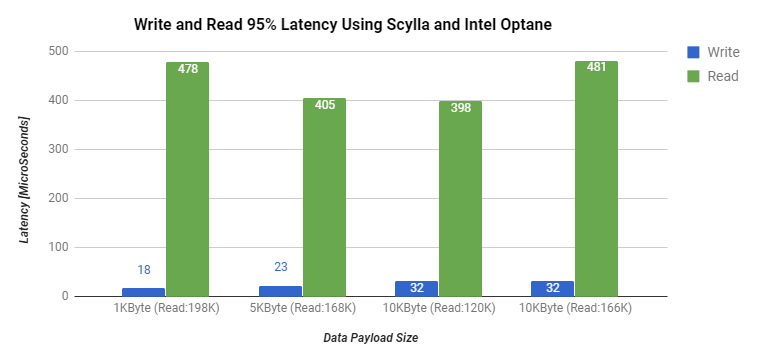

Optane: Disk Throughput and Latency Results for 1 / 5 / 10 KByte Workloads

To test disk throughput and latency for various workloads, we used ScyllaDB as the database, with user profile workloads and a simple K/V schema. For read tests, we executed two scenarios. The first uses a working set much larger than the RAM capacity, which lowers the probability of finding a requested partition in ScyllaDB’s cache. The second uses a small working set, which raises the probability that a partition is cached in ScyllaDB’s memory.
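Why working-set size matters can be shown with a toy model: uniformly random reads against a fixed-size LRU cache. This is a deliberate simplification (ScyllaDB’s row cache is not a plain LRU over uniform keys), and the cache and working-set sizes are hypothetical; it only illustrates that the hit ratio approaches cache size divided by working-set size once the working set outgrows the cache.

```python
import random
from collections import OrderedDict


def lru_hit_ratio(cache_size: int, working_set: int, requests: int, seed: int = 0) -> float:
    """Simulate uniform random reads against an LRU cache; return the hit ratio."""
    rng = random.Random(seed)
    cache: "OrderedDict[int, None]" = OrderedDict()
    hits = 0
    for _ in range(requests):
        key = rng.randrange(working_set)
        if key in cache:
            hits += 1
            cache.move_to_end(key)  # mark as most recently used
        else:
            cache[key] = None
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / requests


# Hypothetical sizes: a cache of 10,000 partitions vs. small and large working sets.
for ws in (1_000, 100_000):
    print(f"working set {ws}: hit ratio {lru_hit_ratio(10_000, ws, 200_000):.3f}")
```

With the small working set nearly every read after warm-up is a cache hit; with the large one only about one read in ten is, so most reads go to the drive, which is the case where Optane’s latency matters most.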

In all tests, ScyllaDB used all of the server’s RAM (128GB), the replication factor was set to 3 (RF=3), and the consistency level was set to ONE (CL=ONE). Each read test ran for 10 minutes, and load is represented as the average percentage of CPU utilization on each server.
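The RF/CL combination determines how many replicas the coordinator waits for before answering. The sketch below covers only the single-datacenter levels ONE, TWO, THREE, QUORUM, and ALL, following the standard Cassandra/ScyllaDB definitions (QUORUM is a majority of RF); the function name is ours.

```python
def replicas_required(cl: str, rf: int) -> int:
    """Replicas that must respond before a request at consistency level `cl` succeeds.

    Covers single-datacenter levels only (no LOCAL_* / EACH_* variants).
    """
    levels = {
        "ONE": 1,
        "TWO": 2,
        "THREE": 3,
        "QUORUM": rf // 2 + 1,  # majority of replicas
        "ALL": rf,
    }
    needed = levels[cl.upper()]
    if needed > rf:
        raise ValueError(f"CL={cl} needs {needed} replicas but RF={rf}")
    return needed


for cl in ("ONE", "QUORUM", "ALL"):
    print(f"RF=3, CL={cl}: wait for {replicas_required(cl, 3)} replica(s)")
```

With RF=3 and CL=ONE, each read waits for a single replica, so the measured latency largely reflects one node’s storage path, while writes are still sent to all three replicas.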

Server-Side Latency Measurements

Summary

Intel® Optane™ drives help servers maximize their potential by delivering a drive that is extremely responsive under any load, has low latency, and helps the server’s CPUs be better utilized. Based on the testing in this blog post, Optane read latencies are almost as low as in-memory reads, while lowering RAM costs and remaining consistent. Using faster drives can help users build persistence into their caching systems. With sub-millisecond read and write latencies, technologies like 3D XPoint and Intel® Optane™ drives can help applications that require near real-time access to data overcome issues such as cold-cache warm-up and data resiliency.

Conclusion: Optane is FAST and we want one.