ScyllaDB Architecture

Close-to-the-metal architecture handles millions of OPS with predictable single-digit millisecond latencies.



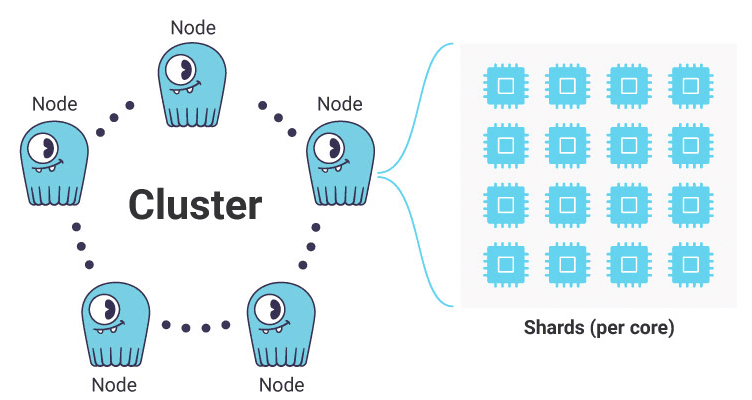

| Cluster | The first layer to be understood is a cluster, a collection of interconnected nodes organized into a virtual ring architecture across which data is distributed. All nodes are considered equal, in a peer-to-peer sense. Without a defined leader the cluster has no single point of failure. Clusters need not reside in a single location. Data can be distributed across a geographically dispersed cluster using multi-datacenter replication. |

| Node | Nodes can be individual on-premises servers or virtual servers (public cloud instances) that use a subset of the hardware on a larger physical server. The data of the entire cluster is distributed as evenly as possible across those nodes. Further, ScyllaDB uses logical units, known as Virtual Nodes (vNodes), to distribute data more evenly and keep performance consistent. A cluster can store multiple copies of the same data on different nodes for reliability. |

| Shard | ScyllaDB divides data even further, creating shards by assigning a fragment of the total data in a node to a specific CPU core, along with its associated memory (RAM) and persistent storage (such as NVMe SSD). Shards operate as mostly independent units, a design known as “shared nothing.” This greatly reduces contention and the need for expensive processing locks. |

| Keyspace | The top-level container for data. A keyspace defines the replication strategy and replication factor (RF) for the data it holds. For example, users may wish to store two, three or even more copies of the same data so that it remains safe if one or more nodes are lost. |

| Table | Within a keyspace, data is stored in separate tables. Tables are two-dimensional data structures composed of columns and rows. Unlike tables in a SQL RDBMS, tables in ScyllaDB are standalone; you cannot make a JOIN across tables. |
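
The “shared nothing” shard design described above can be illustrated with a minimal Python sketch. It is not ScyllaDB code: the `Shard` and `Node` classes and the BLAKE2 hash are hypothetical stand-ins chosen for illustration. The point it shows is that every key is routed to exactly one shard, and each shard only ever touches its own private data, so no locks are needed between shards.

```python
import hashlib


class Shard:
    """One shard: a private slice of data owned by a single CPU core."""

    def __init__(self, shard_id):
        self.shard_id = shard_id
        self.data = {}  # touched only by this shard, so no locking is needed

    def put(self, key, value):
        self.data[key] = value

    def get(self, key):
        return self.data.get(key)


class Node:
    """A node that routes every key to exactly one owning shard."""

    def __init__(self, num_shards):
        self.shards = [Shard(i) for i in range(num_shards)]

    def shard_for(self, key):
        # Stand-in hash (assumption): not ScyllaDB's real token-to-shard mapping.
        digest = hashlib.blake2b(key.encode(), digest_size=8).digest()
        return self.shards[int.from_bytes(digest, "big") % len(self.shards)]

    def put(self, key, value):
        self.shard_for(key).put(key, value)

    def get(self, key):
        return self.shard_for(key).get(key)


node = Node(num_shards=4)
for user in ("alice", "bob", "carol"):
    node.put(user, {"name": user})
```

Because routing is deterministic, a later `node.get("alice")` lands on the same shard that stored the value, without consulting any other shard.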

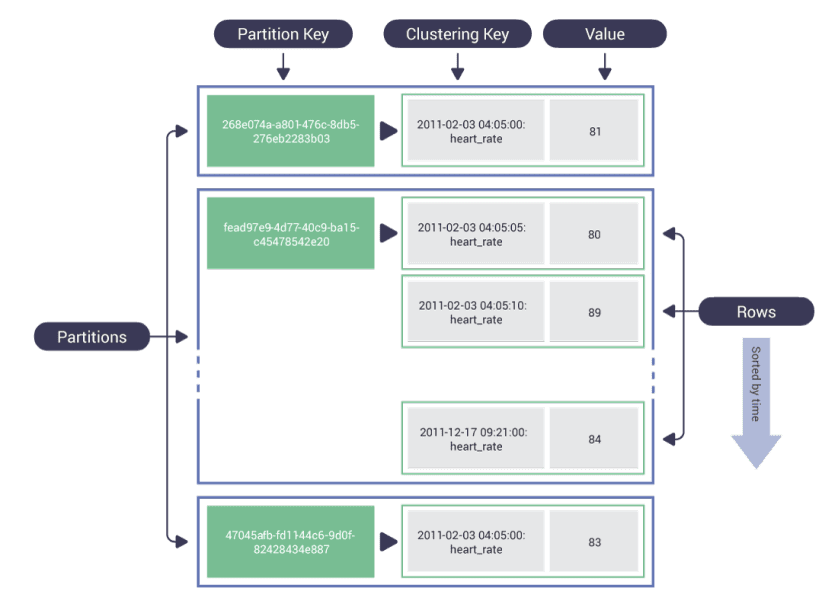

| Partition | Tables in ScyllaDB can be quite large, often measured in terabytes. Therefore tables are divided into smaller chunks, called partitions, to be distributed as evenly as possible across shards. |

| Rows | Each partition contains one or more rows of data sorted in a particular order. Not every column appears in each row. This makes ScyllaDB efficient at storing what is known as “sparse data.” |

| Columns | Data in a table row is divided into columns. A specific row-and-column entry is referred to as a cell. Some columns, known as partition keys and clustering keys, define how data is indexed and sorted. |

ScyllaDB includes methods to find particularly large partitions and large rows that can cause performance problems. ScyllaDB also caches the most frequently used rows in a memory-based cache, to avoid expensive disk-based lookups on so-called “hot partitions.”
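
To make the relationship between partitions, rows and columns concrete, here is a small Python sketch. The `Table` class, the sensor data and the `large_partitions` helper are hypothetical and only model the ideas above: rows inside a partition stay sorted by clustering key, each row stores only the columns it actually has (sparse data), and partitions that grow past a row-count threshold can be flagged.

```python
import bisect
from collections import defaultdict


class Table:
    """Toy model: partition key -> rows kept sorted by clustering key."""

    def __init__(self):
        # Each partition is a list of (clustering_key, row_dict) pairs.
        self.partitions = defaultdict(list)

    def insert(self, partition_key, clustering_key, **columns):
        rows = self.partitions[partition_key]
        keys = [ck for ck, _ in rows]
        i = bisect.bisect_left(keys, clustering_key)
        if i < len(rows) and rows[i][0] == clustering_key:
            rows[i][1].update(columns)  # same key: update the existing row
        else:
            rows.insert(i, (clustering_key, dict(columns)))  # keep sort order

    def large_partitions(self, max_rows):
        """Return partition keys holding more than max_rows rows."""
        return [pk for pk, rows in self.partitions.items() if len(rows) > max_rows]


table = Table()
table.insert("sensor-1", "2024-01-02", temp=21.5)
table.insert("sensor-1", "2024-01-01", temp=20.0, humidity=40)
table.insert("sensor-2", "2024-01-01", humidity=55)
```

Here `sensor-1` is one partition with two rows ordered by date, and the second row simply has no `humidity` cell rather than storing an empty value.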


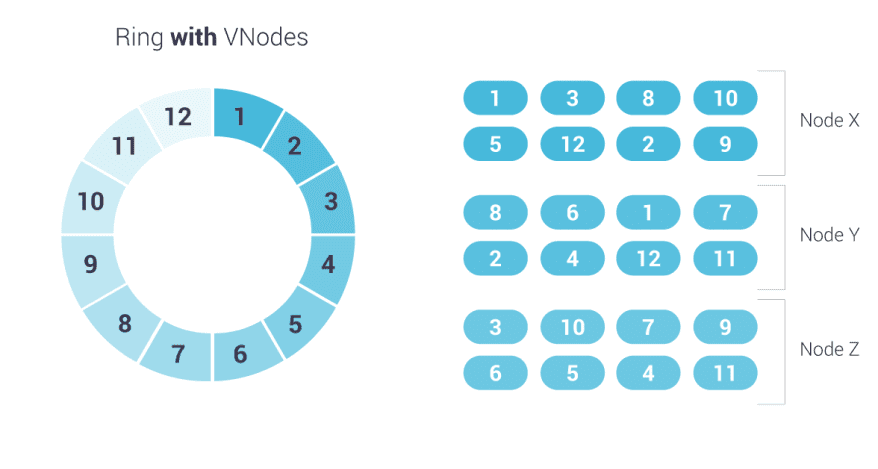

| Ring | All data in ScyllaDB can be visualized as a ring of token ranges, with each partition mapped to a single hashed token (though, conversely, a token can be associated with one or more partitions). These tokens are used to distribute data around the cluster, balancing it as evenly as possible across nodes and shards. |

| vNode | The ring is broken into vNodes (Virtual Nodes) comprising a range of tokens assigned to a physical node or shard. These vNodes are duplicated a number of times across the physical nodes based on the replication factor (RF) set for the keyspace. |
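
The ring and vNode concepts above can be sketched in a few lines of Python. This is a simplified illustration, not ScyllaDB's implementation: the `Ring` class, the node names, the number of vNodes per node and the BLAKE2 hash are all assumptions made for the example, and real replication strategies may also take datacenter and rack layout into account.

```python
import bisect
import hashlib


def token(value):
    # Stand-in hash (assumption), used only to place values on the ring.
    digest = hashlib.blake2b(value.encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


class Ring:
    """Toy token ring: each node owns several vNodes (token ranges)."""

    def __init__(self, nodes, vnodes_per_node=8, replication_factor=3):
        self.rf = replication_factor
        self.num_nodes = len(set(nodes))
        # Each vNode is identified by the token that ends its range.
        self.ring = sorted(
            (token(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes_per_node)
        )
        self.tokens = [t for t, _ in self.ring]

    def replicas(self, partition_key):
        """Walk clockwise from the key's token, collecting RF distinct nodes."""
        i = bisect.bisect_left(self.tokens, token(partition_key))
        wanted = min(self.rf, self.num_nodes)
        owners = []
        while len(owners) < wanted:
            node = self.ring[i % len(self.ring)][1]  # wrap around the ring
            if node not in owners:
                owners.append(node)
            i += 1
        return owners


ring = Ring(["node-a", "node-b", "node-c", "node-d"])
owners = ring.replicas("user:42")
```

With a replication factor of 3, `owners` holds three distinct nodes, and the same partition key always maps to the same set of replicas.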

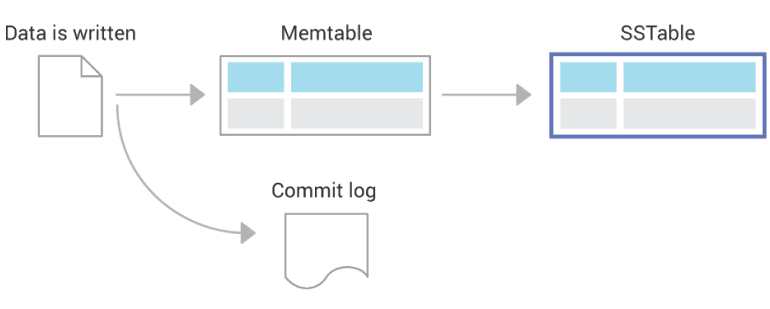

| Memtable | In ScyllaDB’s write path, data is first put into a memtable, which is stored in RAM. In time this data is flushed to disk for persistence. |

| Commitlog | An append-only log of local node operations, written at the same time as data is sent to a memtable. This provides persistence (data durability) in case of a node shutdown; when a server restarts, the commitlog can be used to restore a memtable. |

| SSTables | Data is stored persistently in ScyllaDB on a per-shard basis using immutable (unchangeable, read-only) files called Sorted Strings Tables (SSTables), which use a Log-Structured Merge (LSM) tree format. Once data is flushed from a memtable to an SSTable, the memtable (and the associated commitlog segment) can be deleted. Updates to records are not written to the original SSTable, but recorded in a new SSTable. ScyllaDB has mechanisms to know which version of a specific record is the latest. |

| Tombstones | When a row is deleted, ScyllaDB does not modify the existing SSTables. Instead, it puts a marker called a tombstone into a new SSTable. This serves as a reminder for the database to treat the original data as deleted. |

| Compactions | After a number of SSTables are written to disk, ScyllaDB runs a compaction, a process that keeps only the latest copy of each record and deletes any records marked with tombstones. Once the new compacted SSTable is written, the old, obsolete SSTables are deleted and space is freed up on disk. |

| Compaction Strategy | ScyllaDB uses different algorithms, called strategies, to determine when and how best to run compactions. The strategy determines trade-offs between write, read and space amplification. ScyllaDB Enterprise also supports a unique method called Incremental Compaction Strategy, which can significantly reduce disk space overhead. |

| Drivers | ScyllaDB communicates with applications via drivers for its Cassandra-compatible CQL interface, or with SDKs for its DynamoDB API. You can use multiple drivers or clients connecting to ScyllaDB at the same time to allow for greater overall throughput. Drivers written specifically for ScyllaDB are shard-aware: they communicate directly with the nodes where the data is stored, which provides faster access and better performance. |
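
The write path described above (commitlog, memtable, SSTables, tombstones and compaction) can be sketched as a toy in-memory model. Everything here is hypothetical and simplified: the `MiniLSM` class, the `flush_threshold` parameter and the use of Python tuples in place of on-disk files are assumptions for illustration. In particular, this sketch drops tombstones immediately on compaction, whereas real systems typically keep them for a grace period.

```python
TOMBSTONE = object()  # marker meaning "this key was deleted"


class MiniLSM:
    """Toy write path: commitlog + memtable, flushed to immutable SSTables."""

    def __init__(self, flush_threshold=2):
        self.flush_threshold = flush_threshold
        self.commitlog = []  # append-only record of writes not yet flushed
        self.memtable = {}   # in-memory writes
        self.sstables = []   # oldest first; each is an immutable sorted tuple

    def write(self, key, value):
        self.commitlog.append((key, value))  # logged alongside the memtable write
        self.memtable[key] = value
        if len(self.memtable) >= self.flush_threshold:
            self.flush()

    def delete(self, key):
        self.write(key, TOMBSTONE)  # a delete is just a write of a tombstone

    def flush(self):
        if not self.memtable:
            return
        rows = sorted(self.memtable.items(), key=lambda kv: kv[0])
        self.sstables.append(tuple(rows))
        self.memtable = {}   # flushed data no longer needs the memtable...
        self.commitlog = []  # ...or its commitlog entries

    def read(self, key):
        if key in self.memtable:
            value = self.memtable[key]
            return None if value is TOMBSTONE else value
        for sstable in reversed(self.sstables):  # newest SSTable wins
            for k, v in sstable:
                if k == key:
                    return None if v is TOMBSTONE else v
        return None

    def compact(self):
        merged = {}
        for sstable in self.sstables:  # oldest to newest, so newer values win
            merged.update(sstable)
        live = {k: v for k, v in merged.items() if v is not TOMBSTONE}
        rows = sorted(live.items(), key=lambda kv: kv[0])
        self.sstables = [tuple(rows)] if rows else []


db = MiniLSM(flush_threshold=2)
db.write("a", 1)
db.write("b", 2)   # second write triggers a flush to the first SSTable
db.write("a", 3)   # the update goes to a new SSTable, not the old one
db.delete("b")     # the tombstone is flushed together with the update
```

After these calls there are two SSTables: the older one still contains the original values, while the newer one holds the updated `a` and the tombstone for `b`. Calling `db.compact()` merges them into a single SSTable containing only the latest live record.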


Apache® and Apache Cassandra® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. Amazon DynamoDB® and Dynamo Accelerator® are trademarks of Amazon.com, Inc. No endorsements by The Apache Software Foundation or Amazon.com, Inc. are implied by the use of these marks.